【项目011】基于AlexNet的蝴蝶分类(教学版) 学生版 | 返回首页

作者:欧新宇(Xinyu OU)

当前版本:Release v1.0

开发平台:Paddle 2.3.2

运行环境:Intel Core i7-7700K CPU 4.2GHz, nVidia GeForce GTX 1080 Ti

本教案所涉及的数据集仅用于教学和交流使用,请勿用作商用。

最后更新:2022年10月11日

【实验说明】

一、实验目的

- 学会基于Paddle2.1+版实现卷积神经网络

- 学会自己设计AlexNet的类结构,并基于AlexNet模型进行训练、验证和推理

- 学会对模型进行整体准确率测评和单样本预测

- 学会使用logging函数进行日志输出和保存

- 熟练函数化编程方法

二、实验要求

- 按照给定的网络体系结构图设计

卷积神经网络 - 使用训练集训练模型,并在训练过程中输出

验证集精度 - 使用训练好的模型在测试集上输出

测试精度 - 对给定的测试样本进行预测,输出每一个样本的

预测结果及该类别的概率。 - 尽力而为地在测试集上获得最优精度**(除网络模型不能更改,其他参数均可修改)**

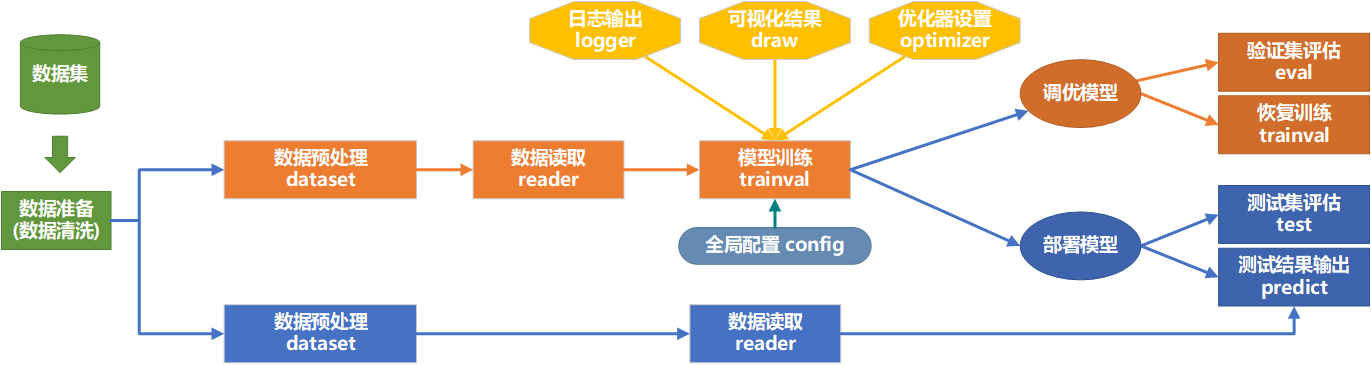

三、代码逻辑结构图

四. 目录结构

- 本项目目录结构说明(可根据实际情况进行修改)

- 数据集根目录:D:\WorkSpace\ExpDatasets\Gestures

- 结果保存目录:D:\WorkSpace\ExpResults\Project011AlexNetButterfly

- 结果部署目录:D:\WorsSpace\ExpDeployments\Project011AlexNetButterfly

- 调优模型路径:checkpoint_models

- 部署模型路径:final_models

- 可视化结果路径:final_figures

- 日志路径:logs

【实验一】 数据集准备(Project04)

实验摘要: 对于模型训练的任务,需要数据预处理,将数据整理成为适合给模型训练使用的格式。蝴蝶识别数据集是一个包含有7个不同种类619个样本的数据集,不同种类的样本按照蝴蝶的类别各自放到了相应的文件夹。不同的样本具有不同的尺度,但总体都是规整的彩色图像。

实验目的:

- 学会观察数据集的文件结构,考虑是否需要进行数据清理,包括删除无法读取的样本、处理冗长不合规范的文件命名等

- 能够按照训练集、验证集、训练验证集、测试集四种子集对数据集进行划分,并生成数据列表

- 能够根据数据划分结果和样本的类别,生成包含数据集摘要信息下数据集信息文件

dataset_info.json - 能简单展示和预览数据的基本信息,包括数据量,规模,数据类型和位深度等

1.0 处理数据集中样本命名的非法字符

原始的数据集的名字有可能会存在特殊的命名符号,从而导致在某些情况下无法正确识别。因此,可以通过批量改名的重命名方式来解决该问题。通过观察,本数据集相对规范,不需要进行数据清洗。

1.1 生产图像列表及类别标签

Q1: 补全下列代码,实现将数据集按照7:1:2的比例划分为为训练集train,验证集val,测试集test和训练验证集trainval。(10分)[Your codes 1~3]

################################################################################## # 数据集预处理 # 作者: Xinyu Ou (http://ouxinyu.cn) # 数据集名称:蝴蝶识别数据集 # 数据集简介: Butterfly蝴蝶数据集包含7个不同种类的蝴蝶。 # 本程序功能: # 1. 将数据集按照7:1:2的比例划分为训练验证集、训练集、验证集、测试集 # 2. 代码将生成4个文件:训练验证集trainval.txt, 训练集列表train.txt, 验证集列表val.txt, 测试集列表test.txt, 数据集信息dataset_info.json # 3. 代码输出信息:图像列表已生成, 其中训练验证集样本490,训练集样本423个, 验证集样本67个, 测试集样本129个, 共计619个。 # 4. 生成数据集标签词典时,需要根据标签-文件夹列表匹配标签列表 ################################################################################### import os import json import codecs # Q1-1: 补全下列代码,实现数据集相关信息的初始化 # [Your codes 1] num_trainval = 0 num_train = 0 num_val = 0 num_test = 0 class_dim = 0 dataset_info = { 'dataset_name': '', 'num_trainval': -1, 'num_train': -1, 'num_val': -1, 'num_test': -1, 'class_dim': -1, 'label_dict': {} } # 本地运行时,需要修改数据集的名称和绝对路径,注意和文件夹名称一致 dataset_name = 'Butterfly' dataset_path = 'D:\\Workspace\\ExpDatasets\\' dataset_root_path = os.path.join(dataset_path, dataset_name) excluded_folder = ['.DS_Store', '.ipynb_checkpoints'] # 被排除的文件夹 # 定义生成文件的路径 data_path = os.path.join(dataset_root_path, 'Data') trainval_list = os.path.join(dataset_root_path, 'trainval.txt') train_list = os.path.join(dataset_root_path, 'train.txt') val_list = os.path.join(dataset_root_path, 'val.txt') test_list = os.path.join(dataset_root_path, 'test.txt') dataset_info_list = os.path.join(dataset_root_path, 'dataset_info.json') # 检测数据集列表是否存在,如果存在则先删除。其中测试集列表是一次写入,因此可以通过'w'参数进行覆盖写入,而不用进行手动删除。 if os.path.exists(trainval_list): os.remove(trainval_list) if os.path.exists(train_list): os.remove(train_list) if os.path.exists(val_list): os.remove(val_list) if os.path.exists(test_list): os.remove(test_list) # Q1-2: 补全下列代码,实现将数据集按照7:1:2的比例进行划分,并将划分结果分别写入四个数据子集列表中 # [Your codes 2] class_name_list = os.listdir(data_path) with codecs.open(trainval_list, 'a', 'utf-8') as f_trainval: with codecs.open(train_list, 'a', 'utf-8') as f_train: with codecs.open(val_list, 'a', 'utf-8') as f_val: with codecs.open(test_list, 'a', 'utf-8') as f_test: for class_name in class_name_list: if class_name not in excluded_folder: dataset_info['label_dict'][str(class_dim)] = class_name # 按照文件夹名称和label_match进行标签匹配 images = os.listdir(os.path.join(data_path, class_name)) count = 0 for image in images: if count % 10 == 0: # 抽取大约10%的样本作为验证数据 f_val.write("{0}\t{1}\n".format(os.path.join(data_path, class_name, image), class_dim)) f_trainval.write("{0}\t{1}\n".format(os.path.join(data_path, class_name, image), class_dim)) num_val += 1 num_trainval += 1 elif count % 10 == 1 or count % 10 == 2: # 抽取大约20%的样本作为测试数据 f_test.write("{0}\t{1}\n".format(os.path.join(data_path, class_name, image), class_dim)) num_test += 1 else: f_train.write("{0}\t{1}\n".format(os.path.join(data_path, class_name, image), class_dim)) f_trainval.write("{0}\t{1}\n".format(os.path.join(data_path, class_name, image), class_dim)) num_train += 1 num_trainval += 1 count += 1 class_dim += 1 # Q1-3: 补全下列代码,实现将数据信息按照规范格式保存至json文件中 # [Your codes 1] dataset_info['dataset_name'] = dataset_name dataset_info['num_trainval'] = num_trainval dataset_info['num_train'] = num_train dataset_info['num_val'] = num_val dataset_info['num_test'] = num_test dataset_info['class_dim'] = class_dim with codecs.open(dataset_info_list, 'w', encoding='utf-8') as f_dataset_info: json.dump(dataset_info, f_dataset_info, ensure_ascii=False, indent=4, separators=(',', ':')) # 格式化字典格式的参数列表 print("图像列表已生成, 其中训练验证集样本{},训练集样本{}个, 验证集样本{}个, 测试集样本{}个, 共计{}个。".format(num_trainval, num_train, num_val, num_test, num_train+num_val+num_test)) dataset_info = json.dumps(dataset_info, indent=4, ensure_ascii=False, sort_keys=False, separators=(',', ':')) # 格式化字典格式的参数列表 print(dataset_info)

图像列表已生成, 其中训练验证集样本490,训练集样本423个, 验证集样本67个, 测试集样本129个, 共计619个。

{

"dataset_name":"Butterfly",

"num_trainval":490,

"num_train":423,

"num_val":67,

"num_test":129,

"class_dim":7,

"label_dict":{

"0":"admiral",

"1":"black_swallowtail",

"2":"machaon",

"3":"monarch_closed",

"4":"monarch_open",

"5":"peacock",

"6":"zebra"

}

}

【实验二】 全局参数设置及数据基本处理

实验摘要: 蝴蝶种类识别是一个多分类问题,我们通过卷积神经网络来完成。这部分通过PaddlePaddle手动构造一个Alexnet卷积神经的网络来实现识别功能。本实验主要实现训练前的一些准备工作,包括:全局参数定义,数据集载入,数据预处理,可视化函数定义,日志输出函数定义。

实验目的:

- 学会使用配置文件定义全局参数

- 学会设置和载入数据集

- 学会对输入样本进行基本的预处理

- 学会定义可视化函数,可视化训练过程,同时输出可视化结果图和数据

- 学会使用logging定义日志输出函数,用于训练过程中的日志保持

2.1 导入依赖及全局参数配置

在进行全局参数配置的时候,有时我们也会获取系统硬件信息来帮助我们判断如何对初始超参数进行设置。本项目也提供了类似的方法:源代码:getSystemInfo.py

Q2: 根据需求补全下列代码(10分)[Your codes 4~5]

要求:

1. 配置数据集的基本信息

2. 定义合适的训练方法

3. 训练结果自动保存至 结果目录/<项目名称>/,包括checkpoint模型路径、最终模型路径、可视化图表路径和日志路径。

4. 验证和推理需要从部署路径 部署目录/<项目名称>/ 中进行读取,基本内容和训练结果一致

#################导入依赖库################################################## import os import sys import json import codecs import numpy as np import time # 载入time时间库,用于计算训练时间 import paddle import matplotlib.pyplot as plt # 载入python的第三方图像处理库 from pprint import pprint sys.path.append(r'D:\WorkSpace\DeepLearning\WebsiteV2\Notebook\Projects') # 导入自定义函数保存位置 from utils.getSystemInfo import getSystemInfo # 载入自定义系统信息函数 from utils.getVisualization import draw_process # 载入自定义可视化绘图函数 from utils.getOptimizer import learning_rate_setting, optimizer_setting # 载入自定义优化函数 from utils.getLogging import init_log_config # 载入自定义日志保存函数 from utils.models.AlexNet import AlexNet # 载入自定义的AlexNet模型 ################全局参数配置################################################### #### 1. 训练超参数定义 # Q2-1: 补全数据集和优化器基本参数 # [Your codes 4] train_parameters = { 'project_name': 'Project011AlexNetButterfly', 'dataset_name': 'Butterfly', 'architecture': 'Alexnet', 'training_data': 'train', 'starting_time': time.strftime("%Y%m%d%H%M", time.localtime()), # 全局启动时间 'input_size': [3, 227, 227], # 输入样本的尺度 'mean_value': [0.485, 0.456, 0.406], # Imagenet均值 'std_value': [0.229, 0.224, 0.225], # Imagenet标准差 'num_trainval': -1, 'num_train': -1, 'num_val': -1, 'num_test': -1, 'class_dim': -1, 'label_dict': {}, 'total_epoch': 30, # 总迭代次数, 代码调试好后考虑 'batch_size': 64, # 设置每个批次的数据大小,同时对训练提供器和测试 'log_interval': 1, # 设置训练过程中,每隔多少个batch显示一次 'eval_interval': 1, # 设置每个多少个epoch测试一次 'dataset_root_path': 'D:\\Workspace\\ExpDatasets\\', 'result_root_path': 'D:\\Workspace\\ExpResults\\', 'deployment_root_path': 'D:\\Workspace\\ExpDeployments\\', 'useGPU': True, # True | Flase 'learning_strategy': { # 学习率和优化器相关参数 'optimizer_strategy': 'Momentum', # 优化器:Momentum, RMS, SGD, Adam 'learning_rate_strategy': 'CosineAnnealingDecay', # 学习率策略: 固定fixed, 分段衰减PiecewiseDecay, 余弦退火CosineAnnealingDecay, 指数ExponentialDecay, 多项式PolynomialDecay 'learning_rate': 0.001, # 固定学习率 'momentum': 0.9, # 动量 'Piecewise_boundaries': [60, 80, 90], # 分段衰减:变换边界,每当运行到epoch时调整一次 'Piecewise_values': [0.01, 0.001, 0.0001, 0.00001], # 分段衰减:步进学习率,每次调节的具体值 'Exponential_gamma': 0.9, # 指数衰减:衰减指数 'Polynomial_decay_steps': 10, # 多项式衰减:衰减周期,每个多少个epoch衰减一次 'verbose': True }, 'augmentation_strategy': { 'withAugmentation': True, # 数据扩展相关参数 'augmentation_prob': 0.5, # 设置数据增广的概率 'rotate_angle': 15, # 随机旋转的角度 'Hflip_prob': 0.5, # 随机翻转的概率 'brightness': 0.4, 'contrast': 0.4, 'saturation': 0.4, 'hue': 0.4, }, } #### 2. 设置简化参数名 args = train_parameters argsAS = args['augmentation_strategy'] argsLS = train_parameters['learning_strategy'] model_name = args['dataset_name'] + '_' + args['architecture'] #### 3. 定义设备工作模式 [GPU|CPU] # 定义使用CPU还是GPU,使用CPU时use_cuda = False,使用GPU时use_cuda = True def init_device(useGPU=args['useGPU']): paddle.device.set_device('gpu:0') if useGPU else paddle.device.set_device('cpu') init_device() #### 4.定义各种路径:模型、训练、日志结果图 # Q2-2: 补全训练结果保存路径和部署路径 # [Your codes 5] # 4.1 数据集路径 dataset_root_path = os.path.join(args['dataset_root_path'], args['dataset_name']) json_dataset_info = os.path.join(dataset_root_path, 'dataset_info.json') # 4.2 训练过程涉及的相关路径 result_root_path = os.path.join(args['result_root_path'], args['project_name']) checkpoint_models_path = os.path.join(result_root_path, 'checkpoint_models') # 迭代训练模型保存路径 final_figures_path = os.path.join(result_root_path, 'final_figures') # 训练过程曲线图 final_models_path = os.path.join(result_root_path, 'final_models') # 最终用于部署和推理的模型 logs_path = os.path.join(result_root_path, 'logs') # 训练过程日志 # 4.3 checkpoint_ 路径用于定义恢复训练所用的模型保存 # checkpoint_path = os.path.join(result_path, model_name + '_final') # checkpoint_model = os.path.join(args['result_root_path'], model_name + '_' + args['checkpoint_time'], 'checkpoint_models', args['checkpoint_model']) # 4.4 验证和测试时的相关路径(文件) deployment_root_path = os.path.join(args['deployment_root_path'], args['project_name']) deployment_checkpoint_path = os.path.join(deployment_root_path, 'checkpoint_models', model_name + '_final') deployment_final_models_path = os.path.join(deployment_root_path, 'final_models', model_name + '_final') deployment_final_figure_path = os.path.join(deployment_root_path, 'final_figures') deployment_logs_path = os.path.join(deployment_root_path, 'logs') # 4.5 初始化结果目录 def init_result_path(): if not os.path.exists(final_models_path): os.makedirs(final_models_path) if not os.path.exists(final_figures_path): os.makedirs(final_figures_path) if not os.path.exists(logs_path): os.makedirs(logs_path) if not os.path.exists(checkpoint_models_path): os.makedirs(checkpoint_models_path) init_result_path() #### 5. 初始化参数 def init_train_parameters(): dataset_info = json.loads(open(json_dataset_info, 'r', encoding='utf-8').read()) train_parameters['num_trainval'] = dataset_info['num_trainval'] train_parameters['num_train'] = dataset_info['num_train'] train_parameters['num_val'] = dataset_info['num_val'] train_parameters['num_test'] = dataset_info['num_test'] train_parameters['class_dim'] = dataset_info['class_dim'] train_parameters['label_dict'] = dataset_info['label_dict'] init_train_parameters() #### 6. 初始化日志方法 logger = init_log_config(logs_path=logs_path, model_name=model_name) # 输出训练参数 train_parameters # if __name__ == '__main__': # pprint(args)

2.2 数据集定义及数据预处理

在Paddle 2.0+ 中,我们可使用paddle.io来构造标准的数据集类,用于通过数据列表读取样本,并对样本进行预处理。全新的paddle.vision.transforms可以轻松的实现样本的多种预处理功能,而不用手动去写数据预处理的函数,这大简化了代码的复杂性。

代码最后给出了该了简单测试,输出两种不同模式的样本,进行数据预处理和不进行数据预处理。

Q3:完成数据集类定义的部分代码(10分) ([Your codes 4~7])

要求:

- 读取数据列表文件,并将每一行都按照路径和标签进行拆分成两个字段的序列,并将序列依次保存至data序列中;

- 数据增广要求按照全局参数中指定的概率进行,包括随机裁剪、水平翻转、随机2D旋转、色彩扰动(亮度、对比度、饱和度调节);

- 所有样本都要求以规范的input_size(227×227)的尺度进行输入,并且需要进行均值和方差归一化,同时需要转换为Paddle要求的Tensor形态;

- 编写

__getitem__和__len__函数,实现数据的读取及数据集长度的获取

import os import sys import cv2 import numpy as np import paddle import paddle.vision.transforms as T from paddle.io import DataLoader input_size = (args['input_size'][1], args['input_size'][2]) # 1. 数据集的定义 class ButterflyDataset(paddle.io.Dataset): def __init__(self, dataset_root_path, mode='test', withAugmentation=argsAS['withAugmentation']): assert mode in ['train', 'val', 'test', 'trainval'] self.data = [] # 定义数据序列 self.withAugmentation = withAugmentation # Q3-1: 读取数据列表文件,并将每一行都按照路径和标签进行拆分成两个字段的序列,并将序列依次保存至data序列中 # [Your codes 6] with open(os.path.join(dataset_root_path, mode+'.txt')) as f: for line in f.readlines(): info = line.strip().split('\t') image_path = os.path.join(dataset_root_path, 'Data', info[0].strip()) if len(info) == 2: self.data.append([image_path, info[1].strip()]) elif len(info) == 1: self.data.append([image_path, -1]) # Q3-2:按照一定的随机增广概率(在Config中指定)进行数据增广 # [Your codes 7] prob = np.random.random() if mode in ['train', 'trainval'] and prob >= argsAS['augmentation_prob']: self.transforms = T.Compose([ T.RandomResizedCrop(input_size), T.RandomHorizontalFlip(argsAS['Hflip_prob']), T.RandomRotation(argsAS['rotate_angle']), T.ColorJitter(brightness=argsAS['brightness'], contrast=argsAS['contrast'], saturation=argsAS['saturation'], hue=argsAS['hue']), T.ToTensor(), T.Normalize(mean=args['mean_value'], std=args['std_value']) ]) elif mode in ['val', 'test'] or prob < argsAS['augmentation_prob']: self.transforms = T.Compose([ T.Resize(input_size), T.ToTensor(), T.Normalize(mean=args['mean_value'], std=args['std_value']) ]) # Q3-3: 补全下列函数,实现对单个样本的读取和初始化 # [Your codes 8] def __getitem__(self, index): image_path, label = self.data[index] image = cv2.imread(image_path, 1) # 使用cv2进行数据读取可以强制将的图像转化为彩色模式,其中0为灰度模式,1为彩色模式 if self.withAugmentation == True: image = self.transforms(image) label = np.array(label, dtype='int64') return image, label # Q3-4: 补全下列函数,实现样本总数的获取 # [Your codes 9] def __len__(self): return len(self.data) ############################################################### # 测试输入数据类:分别输出进行预处理和未进行预处理的数据形态和例图 if __name__ == "__main__": import random # 1. 载入数据 dataset_val_withoutAugmentation = ButterflyDataset(dataset_root_path, mode='val', withAugmentation=False) id = random.randrange(0, len(dataset_val_withoutAugmentation)) img1 = dataset_val_withoutAugmentation[id][0] dataset_val_withAugmentation = ButterflyDataset(dataset_root_path, mode='val') img2 = dataset_val_withAugmentation[id][0] print('第{}个验证集样本,\n 数据预处理前的形态为:{},\n 数据预处理后的数据形态为: {}'.format(id, img1.shape, img2.shape))

第56个验证集样本,

数据预处理前的形态为:(400, 500, 3),

数据预处理后的数据形态为: [3, 227, 3]

2.3 设置数据提供器

结合paddle.io.DataLoader工具包,可以将读入的数据进行batch划分,确定是否进行随机打乱和是否丢弃最后的冗余样本。

- 一般来说,对于训练样本(包括train和trainvl),我们需要进行随机打乱,让每次输入到网络中的样本处于不同的组合形式,防止过拟合的产生;对于验证数据和测试数据,由于每次测试都需要对所有样本进行一次完整的遍历,并计算最终的平均值,因此是否进行打乱,并不影响最终的结果。

- 由于在最终输出的loss和accuracy的平均值时,会事先进行一次批内的平均,因此如果最后一个batch的数据并不能构成完整的一批,即实际样本数量小于batch_size,会导致最终计算精度产生一定的偏差。所以,当样本数较多的时候,可以考虑在训练时丢弃最后一个batch的数据。但值得注意的是,验证集和测试集不能进行丢弃,否则会有一部分样本无法进行测试。

Q4:定义数据迭代提供器(10分) ([Your codes 10])

要求:

- 同时获取四个子集的迭代读取器

- 根据每个子集的性质,设置参数

随机打乱shuffle,末尾丢弃 drop_last

import os import sys from paddle.io import DataLoader # Q4-1: 从四个数据自己中获取数据 # [Your codes 10] dataset_trainval = ButterflyDataset(dataset_root_path, mode='trainval') dataset_train = ButterflyDataset(dataset_root_path, mode='train') dataset_val = ButterflyDataset(dataset_root_path, mode='val') dataset_test = ButterflyDataset(dataset_root_path, mode='test') # Q4-2:创建读取迭代器 # [Your codes 11] trainval_reader = DataLoader(dataset_trainval, batch_size=args['batch_size'], shuffle=True, drop_last=True) train_reader = DataLoader(dataset_train, batch_size=args['batch_size'], shuffle=True, drop_last=True) val_reader = DataLoader(dataset_val, batch_size=args['batch_size'], shuffle=False, drop_last=False) test_reader = DataLoader(dataset_test, batch_size=args['batch_size'], shuffle=False, drop_last=False) ###################################################################################### # 测试读取器 if __name__ == "__main__": for i, (image, label) in enumerate(val_reader()): if i == 2: break print('验证集batch_{}的图像形态:{}, 标签形态:{}'.format(i, image.shape, label.shape))

验证集batch_0的图像形态:[64, 3, 227, 227], 标签形态:[64]

验证集batch_1的图像形态:[3, 3, 227, 227], 标签形态:[3]

2.4 定义过程可视化函数 (无需事先执行)

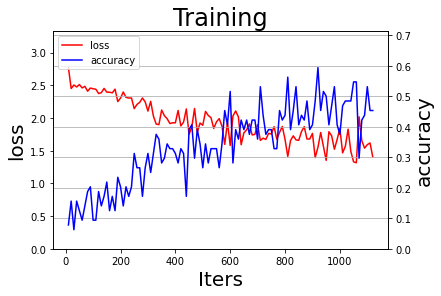

定义训练过程中用到的可视化方法, 包括训练损失, 训练集批准确率, 测试集损失,测试机准确率等。 根据具体的需求,可以在训练后展示这些数据和迭代次数的关系. 值得注意的是, 训练过程中可以每个epoch绘制一个数据点,也可以每个batch绘制一个数据点,也可以每个n个batch或n个epoch绘制一个数据点。可视化代码除了实现训练后自动可视化的函数,同时实现将可视化的图片和数据进行保存,保存的文件夹由 final_figures_path 指定。

在本例中,为了简化代码,同时尽量使得代码具有通用性,我们将可视化代码用 .py 文件进行保存。然后采用自定义函数方式进行载入。使用方法如下所示

# 函数载入 sys.path.append(r'D:\WorkSpace\DeepLearning\WebsiteV2\Notebook\Projects') # 定义模块保存位置 from utils.getVisualization import draw_process # 导入日志模块

# 测试可视化函数 if __name__ == '__main__': try: train_log = np.load(os.path.join(final_figures_path, 'train.npy')) print('训练数据可视化结果:') draw_process('Training', 'loss', 'accuracy', iters=train_log[0], losses=train_log[1], accuracies=train_log[2], final_figures_path=final_figures_path, figurename='train', isShow=True) except: print('以下图例为测试数据。') draw_process('Training', 'loss', 'accuracy', figurename='default', isShow=True)

以下图例为测试数据。

2.5 定义日志输出函数 (无需事先执行)

logging是一个专业的日志输出库,可以用于在屏幕上打印运行结果(和print()函数一致),也可以实现将这些运行结果保存到指定的文件夹中,用于后续的研究。

在本例中,为了简化代码,同时尽量使得代码具有通用性,我们将日志输出函数代码用 .py 文件进行保存。然后采用自定义函数方式进行载入。使用方法如下所示:

# 函数载入 sys.path.append(r'D:\WorkSpace\DeepLearning\WebsiteV2\Notebook\Projects') # 定义模块保存位置 from utils.getLogging import init_log_config logger = init_log_config(logs_path=logs_path, model_name=model_name)

源代码:getLogging.py

# 测试日志输出 if __name__ == "__main__": logger.info('测试一下, 模型名称: {}'.format(model_name)) system_info = json.dumps(getSystemInfo(), indent=4, ensure_ascii=False, sort_keys=False, separators=(',', ':')) logger.info('系统基本信息:') logger.info(system_info)

2022-10-11 14:27:02,650 - INFO: 测试一下, 模型名称: Butterfly_Alexnet

2022-10-11 14:27:03,732 - INFO: 系统基本信息:

2022-10-11 14:27:03,733 - INFO: {

"操作系统":"Windows-10-10.0.22000-SP0",

"CPU":"Intel(R) Core(TM) i7-9700 CPU @ 3.00GHz",

"内存":"7.32G/15.88G (46.10%)",

"GPU":"b'GeForce RTX 2080' 1.13G/8.00G (0.14%)",

"CUDA":"7.6.5",

"cuDNN":"7.6.5",

"Paddle":"2.3.2"

}

【实验三】 模型训练与评估

实验摘要: 蝴蝶种类识别是一个多分类问题,我们通过卷积神经网络来完成。这部分通过PaddlePaddle手动构造一个Alexnet卷积神经的网络来实现识别功能,最后一层采用Softmax激活函数完成分类任务。

实验目的:

- 掌握卷积神经网络的构建和基本原理

- 深刻理解训练集、验证集、训练验证集及测试集在模型训练中的作用

- 学会按照网络拓扑结构图定义神经网络类 (Paddle 2.0+)

- 学会在线测试和离线测试两种测试方法

- 学会定义多种优化方法,并在全局参数中进行定义选择

3.1 配置网络

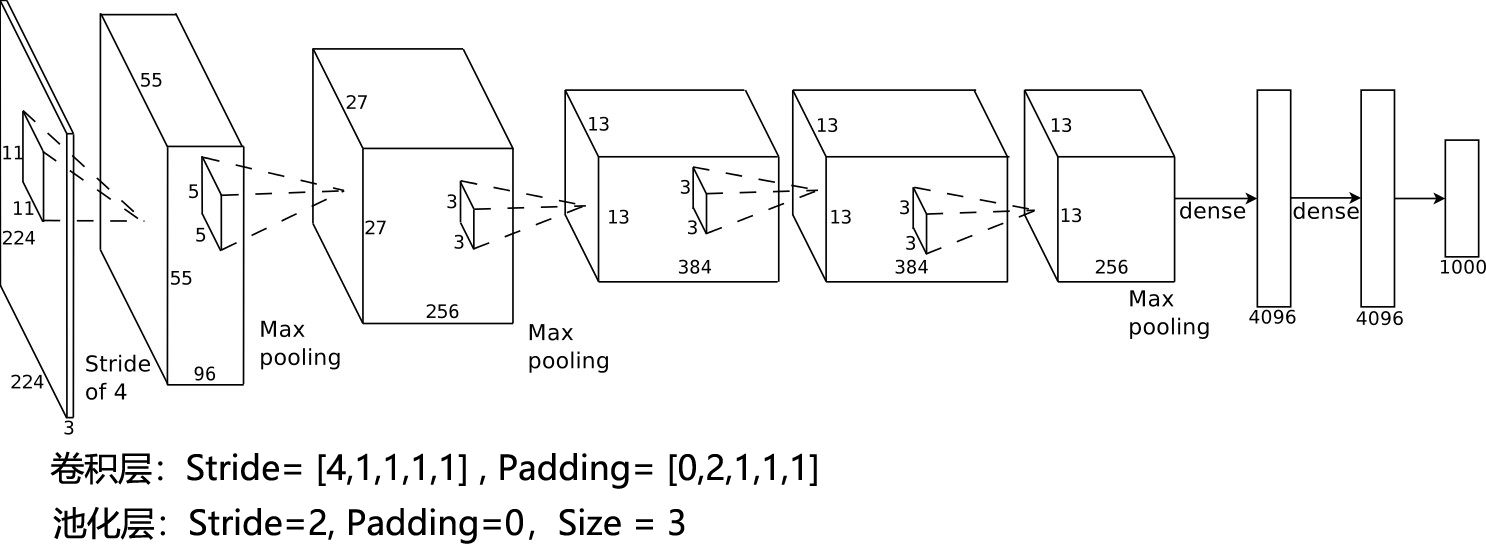

3.1.1 网络拓扑结构图

需要注意的是,在Alexnet中实际输入的尺度会被Crop为

3.1.2 网络参数配置表

Q5: 根据拓扑结构图补全网络参数配置表(10分)

| Layer | Input | Kernels_num | Kernels_size | Stride | Padding | PoolingType | Output | Parameters |

|---|---|---|---|---|---|---|---|---|

| Input | 3×227×227 | |||||||

| Conv1 | 3×227×227 | 96 | 3×11×11 | 4 | 0 | 96×55×55 | (3×11×11+1)×96=34944 | |

| Pool1 | 96×55×55 | 96 | 96×3×3 | 2 | 0 | max | 96×27×27 | 0 |

| Conv2 | 96×27×27 | 256 | 96×5×5 | 1 | 2 | 256×27×27 | (96×5×5+1)×256=614656 | |

| Pool2 | 256×27×27 | 256 | 256×3×3 | 2 | 0 | max | 256×13×13 | 0 |

| Conv3 | 256×13×13 | 384 | 256×3×3 | 1 | 1 | 384×13×13 | (256×3×3+1)×384=885120 | |

| Conv4 | 384×13×13 | 384 | 384×3×3 | 1 | 1 | 384×13×13 | (384×3×3+1)×384=1327488 | |

| Conv5 | 384×13×13 | 256 | 384×3×3 | 1 | 1 | 256×13×13 | (384×3×3+1)×256=884992 | |

| Pool5 | 256×13×13 | 256 | 256×3×3 | 2 | 0 | max | 256×6×6 | 0 |

| FC6 | (256×6×6)×1 | 4096×1 | (9216+1)×4096=37752832 | |||||

| FC7 | 4096×1 | 4096×1 | (4096+1)×4096=16781312 | |||||

| FC8 | 4096×1 | 1000×1 | (4096+1)×1000=4097000 | |||||

| Output | 1000×1 | |||||||

| Total = 62378344 |

其中卷积层参数:3747200,占总参数的6%。

3.1.3 定义神经网络类

从Alexnet开始,包括VGG,GoogLeNet,Resnet等模型都是层次较深的模型,如果按照逐层的方式进行设计,代码会变得非常繁琐。因此,我们可以考虑将相同结构的模型进行汇总和合成,例如Alexnet中,卷积层+激活+池化层就是一个完整的结构体。

在本例中,为了简化代码,同时尽量使得代码具有通用性,我们将定义优化方法和学习率函数代码用 .py 文件进行保存。然后采用自定义函数方式进行载入。使用方法如下所示:

# 函数载入 sys.path.append(r'D:\WorkSpace\DeepLearning\WebsiteV2\Notebook\Projects') # 定义模块保存位置 from utils.models.AlexNet import AlexNet

源代码:AlexNet.py

Q6:根据网络拓扑结构图和网络参数配置表完成AlexNet模型类的定义(10分)([Your codes 12~14])

import paddle import paddle.nn as nn class AlexNet(nn.Layer): def __init__(self, num_classes=1000): super(AlexNet, self).__init__() self.num_classes = num_classes # Q6-1: 根据Cifar10拓扑结构图和网络参数配置表完成下列Cifar10模型的类定义 # 各层超参数定义: [Your codes 12] self.features = nn.Sequential( nn.Conv2D(in_channels=3, out_channels=96, kernel_size=11, stride=4, padding=0), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2), nn.Conv2D(in_channels=96, out_channels=256, kernel_size=5, stride=1, padding=2), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2), nn.Conv2D(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1), nn.ReLU(), nn.Conv2D(in_channels=384, out_channels=384, kernel_size=3, stride=1, padding=1), nn.ReLU(), nn.Conv2D(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2), ) self.fc = nn.Sequential( nn.Linear(in_features=256*6*6, out_features=4096), nn.ReLU(), nn.Dropout(), nn.Linear(in_features=4096, out_features=4096), nn.ReLU(), nn.Dropout(), nn.Linear(in_features=4096, out_features=num_classes), ) # Q6-2: 根据Cifar10拓扑结构图和网络参数配置表完成下列Cifar10模型的类定义 # 定义前向传输过程: [Your codes 13] def forward(self, inputs): x = self.features(inputs) x = paddle.flatten(x, 1) x = self.fc(x) return x #### 网络测试 if __name__ == '__main__': # Q6-3:完成模型测试代码的定义 # [Your codes 14] network = AlexNet() paddle.summary(network, (1,3,227,227))

---------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===========================================================================

Conv2D-91 [[1, 3, 227, 227]] [1, 96, 55, 55] 34,944

ReLU-36 [[1, 96, 55, 55]] [1, 96, 55, 55] 0

MaxPool2D-16 [[1, 96, 55, 55]] [1, 96, 27, 27] 0

Conv2D-92 [[1, 96, 27, 27]] [1, 256, 27, 27] 614,656

ReLU-37 [[1, 256, 27, 27]] [1, 256, 27, 27] 0

MaxPool2D-17 [[1, 256, 27, 27]] [1, 256, 13, 13] 0

Conv2D-93 [[1, 256, 13, 13]] [1, 384, 13, 13] 885,120

ReLU-38 [[1, 384, 13, 13]] [1, 384, 13, 13] 0

Conv2D-94 [[1, 384, 13, 13]] [1, 384, 13, 13] 1,327,488

ReLU-39 [[1, 384, 13, 13]] [1, 384, 13, 13] 0

Conv2D-95 [[1, 384, 13, 13]] [1, 256, 13, 13] 884,992

ReLU-40 [[1, 256, 13, 13]] [1, 256, 13, 13] 0

MaxPool2D-18 [[1, 256, 13, 13]] [1, 256, 6, 6] 0

Linear-56 [[1, 9216]] [1, 4096] 37,752,832

ReLU-41 [[1, 4096]] [1, 4096] 0

Dropout-37 [[1, 4096]] [1, 4096] 0

Linear-57 [[1, 4096]] [1, 4096] 16,781,312

ReLU-42 [[1, 4096]] [1, 4096] 0

Dropout-38 [[1, 4096]] [1, 4096] 0

Linear-58 [[1, 4096]] [1, 1000] 4,097,000

===========================================================================

Total params: 62,378,344

Trainable params: 62,378,344

Non-trainable params: 0

---------------------------------------------------------------------------

Input size (MB): 0.59

Forward/backward pass size (MB): 11.05

Params size (MB): 237.95

Estimated Total Size (MB): 249.59

---------------------------------------------------------------------------

3.2 定义优化方法 (无需事先执行)

学习率策略和优化方法是两个密不可分的模块,学习率策略定义了学习率的变化方法,常见的包括固定学习率、分段学习率、余弦退火学习率、指数学习率和多项式学习率等;优化策略是如何进行学习率的学习和变化,常见的包括动量SGD、RMS、SGD和Adam等。

在本例中,为了简化代码,同时尽量使得代码具有通用性,我们将定义优化方法和学习率函数代码用 .py 文件进行保存。然后采用自定义函数方式进行载入。使用方法如下所示:

# 函数载入 sys.path.append(r'D:\WorkSpace\DeepLearning\WebsiteV2\Notebook\Projects') # 定义模块保存位置 from utils.getOptimizer import learning_rate_setting, optimizer_setting

源代码:getOptimizer.py

if __name__ == '__main__': # print('当前学习率策略为: {} + {}'.format(argsLS['optimizer_strategy'], argsLS['learning_rate_strategy'])) linear = paddle.nn.Linear(10, 10) lr = learning_rate_setting(args=args, argsO=argsLS) optimizer = optimizer_setting(linear, lr, argsO=argsLS) if argsLS['optimizer_strategy'] == 'fixed': print('learning = {}'.format(argsLS['learning_rate'])) else: for epoch in range(10): for batch_id in range(10): x = paddle.uniform([10, 10]) out = linear(x) loss = paddle.mean(out) loss.backward() optimizer.step() optimizer.clear_gradients() # lr.step() # 按照batch进行学习率更新 lr.step() # 按照epoch进行学习率更新

当前学习率策略为: Momentum + CosineAnnealingDecay

Epoch 0: CosineAnnealingDecay set learning rate to 0.001.

Epoch 1: CosineAnnealingDecay set learning rate to 0.0009996954135095479.

Epoch 2: CosineAnnealingDecay set learning rate to 0.000998782025129912.

Epoch 3: CosineAnnealingDecay set learning rate to 0.0009972609476841365.

Epoch 4: CosineAnnealingDecay set learning rate to 0.000995134034370785.

Epoch 5: CosineAnnealingDecay set learning rate to 0.0009924038765061038.

Epoch 6: CosineAnnealingDecay set learning rate to 0.0009890738003669026.

Epoch 7: CosineAnnealingDecay set learning rate to 0.000985147863137998.

Epoch 8: CosineAnnealingDecay set learning rate to 0.0009806308479691592.

Epoch 9: CosineAnnealingDecay set learning rate to 0.0009755282581475766.

Epoch 10: CosineAnnealingDecay set learning rate to 0.000969846310392954.

3.3 定义验证函数

验证函数有两个功能,一是在训练过程中实时地对验证集进行测试(在线测试),二是在训练结束后对测试集进行测试(离线测试)。

验证函数的具体流程包括:

- 初始化输出变量,包括top1精度,top5精度和损失

- 基于批次batch的结构进行循环测试,具体包括:

1). 定义输入层(image,label),图像输入维度 [batch, channel, Width, Height] (-1,imgChannel,imgSize,imgSize),标签输入维度 [batch, 1] (-1,1)

2). 定义输出层:在paddle2.0+中,我们使用model.eval_batch([image],[label])进行评估验证,该函数可以直接输出精度和损失,但在运行前需要使用model.prepare()进行配置。值得注意的,在计算测试集精度的时候,需要对每个批次的精度/损失求取平均值。

在定义eval()函数的时候,我们需要为其指定两个必要参数:model是测试的模型,data_reader是迭代的数据读取器,取值为val_reader(), test_reader(),分别对验证集和测试集。此处验证集和测试集数据的测试过程是相同的,只是所使用的数据不同;此外,可选参数verbose用于定义是否在测试的时候输出过程

Q7:补全下列验证代码,实现对验证集的评估,并输出损失值,top1精度和top5精度(10分)([Your codes 15])

# 载入项目文件夹 import sys import numpy as np import paddle import paddle.nn.functional as F from paddle.static import InputSpec __all__ = ['eval'] # Q7:定义验证函数,实现对验证数据的评估 # [Your codes 15] def eval(model, data_reader, verbose=0): accuracies_top1 = [] accuracies_top5 = [] losses = [] n_total = 0 for batch_id, (image, label) in enumerate(data_reader): n_batch = len(label) n_total = n_total + n_batch label = paddle.unsqueeze(label, axis=1) loss, acc = model.eval_batch([image], [label]) losses.append(loss[0]) accuracies_top1.append(acc[0][0]*n_batch) accuracies_top5.append(acc[0][1]*n_batch) if verbose == 1: print('\r Batch:{}/{}, acc_top1:[{:.5f}], acc_top5:[{:.5f}]'.format(batch_id+1, len(data_reader), acc[0][0], acc[0][1]), end='') avg_loss = np.sum(losses)/n_total # loss 记录的是当前batch的累积值 avg_acc_top1 = np.sum(accuracies_top1)/n_total # metric 是当前batch的平均值 avg_acc_top5 = np.sum(accuracies_top5)/n_total return avg_loss, avg_acc_top1, avg_acc_top5 ############################################################## if __name__ == '__main__': try: # 设置输入样本的维度 input_spec = InputSpec(shape=[None] + args['input_size'], dtype='float32', name='image') label_spec = InputSpec(shape=[None, 1], dtype='int64', name='label') # 载入模型 network = AlexNet(num_classes=args['class_dim']) model = paddle.Model(network, input_spec, label_spec) # 模型实例化 model.load(deployment_checkpoint_path) # 载入调优模型的参数 model.prepare(loss=paddle.nn.CrossEntropyLoss(), # 设置loss metrics=paddle.metric.Accuracy(topk=(1,5))) # 设置评价指标 # 执行评估函数,并输出验证集样本的损失和精度 print('开始评估...') avg_loss, avg_acc_top1, avg_acc_top5 = eval(model, val_reader(), verbose=1) print('\r [验证集] 损失: {:.5f}, top1精度:{:.5f}, top5精度为:{:.5f} \n'.format(avg_loss, avg_acc_top1, avg_acc_top5), end='') avg_loss, avg_acc_top1, avg_acc_top5 = eval(model, test_reader(), verbose=1) print('\r [测试集] 损失: {:.5f}, top1精度:{:.5f}, top5精度为:{:.5f}'.format(avg_loss, avg_acc_top1, avg_acc_top5), end='') except: print('数据不存在跳过测试')

开始评估...

[验证集] 损失: 0.04095, top1精度:0.53731, top5精度为:0.89552

[测试集] 损失: 0.03296, top1精度:0.44961, top5精度为:0.89922

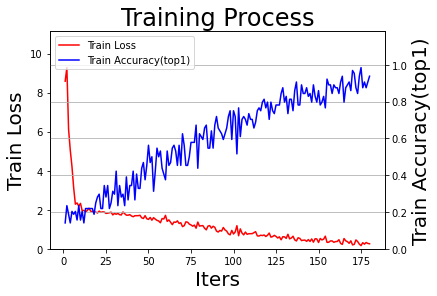

3.4 模型训练及在线测试

在Paddle 2.0+动态图模式下,动态图模式被作为默认进程,同时动态图守护进程 fluid.dygraph.guard(PLACE) 被取消。

训练部分的具体流程包括:

- 定义输入层(image, label): 图像输入维度 [batch, channel, Width, Height] (-1,imgChannel,imgSize,imgSize),标签输入维度 [batch, 1] (-1,1)

- 实例化网络模型: model = Alexnet()

- 定义学习率策略和优化算法

- 定义输出层,即模型准备函数model.prepare()

- 基于"周期-批次"两层循环进行训练

- 记录训练过程的结果,并定期输出模型。此处,我们分别保存用于调优和恢复训练的checkpoint_model模型和用于部署与预测的final_model模型

Q8:完成下列模型训练代码(20分) ([Your codes 16~18])

要求:

- 补全训练部分代码,要求实现在一个周期内使用mini-batch方式进行训练,同时将每个batch的当前batch轮次,平均损失和top1精度、top5精度保存到可视化序列中,用于可视化训练过程

- 补全验证集测评代码,并输出到可视化序列

- 补全模型显示及保存代码,实现将最优的模型分别保存为调优模型及部署模型

- 补全主函数中的模型配置代码

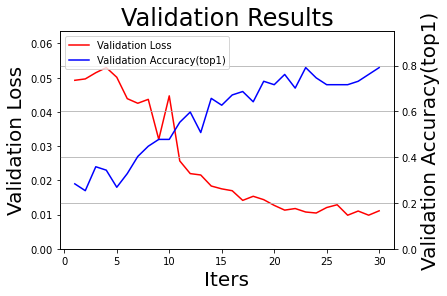

import os import time import json import paddle from paddle.static import InputSpec # 初始配置变量 total_epoch = train_parameters['total_epoch'] # 初始化绘图列表 all_train_iters = [] all_train_losses = [] all_train_accs_top1 = [] all_train_accs_top5 = [] all_test_losses = [] all_test_iters = [] all_test_accs_top1 = [] all_test_accs_top5 = [] def train(model): # 初始化临时变量 num_batch = 0 best_result = 0 best_result_id = 0 elapsed = 0 for epoch in range(1, total_epoch+1): # Q8-1:补全下列训练代码,实现在一个周期内使用mini-batch方式进行训练 # [Your codes 16] for batch_id, (image, label) in enumerate(train_reader()): num_batch += 1 label = paddle.unsqueeze(label, axis=1) loss, acc = model.train_batch([image], [label]) if num_batch % train_parameters['log_interval'] == 0: # 每10个batch显示一次日志,适合大数据集 avg_loss = loss[0][0] acc_top1 = acc[0][0] acc_top5 = acc[0][1] elapsed_step = time.perf_counter() - elapsed - start elapsed = time.perf_counter() - start logger.info('Epoch:{}/{}, batch:{}, train_loss:[{:.5f}], acc_top1:[{:.5f}], acc_top5:[{:.5f}]({:.2f}s)' .format(epoch, total_epoch, num_batch, loss[0][0], acc[0][0], acc[0][1], elapsed_step)) # 记录训练过程,用于可视化训练过程中的loss和accuracy all_train_iters.append(num_batch) all_train_losses.append(avg_loss) all_train_accs_top1.append(acc_top1) all_train_accs_top5.append(acc_top5) # 每隔一定周期进行一次测试 if epoch % train_parameters['eval_interval'] == 0 or epoch == total_epoch: # Q8-2:补全下列验证集测评代码,并输出到可视化序列 # [Your codes 17] # 模型校验 avg_loss, avg_acc_top1, avg_acc_top5 = eval(model, val_reader()) logger.info('[validation] Epoch:{}/{}, val_loss:[{:.5f}], val_top1:[{:.5f}], val_top5:[{:.5f}]'.format(epoch, total_epoch, avg_loss, avg_acc_top1, avg_acc_top5)) # 记录测试过程,用于可视化训练过程中的loss和accuracy all_test_iters.append(epoch) all_test_losses.append(avg_loss) all_test_accs_top1.append(avg_acc_top1) all_test_accs_top5.append(avg_acc_top5) # Q8-3:补全下列模型显示及保存代码,实现将最优的模型分别保存为调优模型及部署模型 # [Your codes 18] # 将性能最好的模型保存为final模型 if avg_acc_top1 > best_result: best_result = avg_acc_top1 best_result_id = epoch # finetune model 用于调优和恢复训练 model.save(os.path.join(checkpoint_models_path, model_name + '_final')) # inference model 用于部署和预测 model.save(os.path.join(final_models_path, model_name + '_final'), training=False) logger.info('已保存当前测试模型(epoch={})为最优模型:{}_final'.format(best_result_id, model_name)) logger.info('最优top1测试精度:{:.5f} (epoch={})'.format(best_result, best_result_id)) logger.info('训练完成,最终性能accuracy={:.5f}(epoch={}), 总耗时{:.2f}s, 已将其保存为:{}_final'.format(best_result, best_result_id, time.perf_counter() - start, model_name)) #### 训练主函数 ########################################################3 if __name__ == '__main__': system_info = json.dumps(getSystemInfo(), indent=4, ensure_ascii=False, sort_keys=False, separators=(',', ':')) logger.info('系统基本信息') logger.info(system_info) # 将此次训练的超参数进行保存 data = json.dumps(train_parameters, indent=4, ensure_ascii=False, sort_keys=False, separators=(',', ':')) # 格式化字典格式的参数列表 logger.info(data) # 启动训练过程 logger.info('训练参数保存完毕,使用{}模型, 训练{}数据, 训练集{}, 启动训练...'.format(train_parameters['architecture'],train_parameters['dataset_name'],train_parameters['training_data'])) logger.info('当前模型目录为:{}'.format(model_name + '_' + train_parameters['starting_time'])) # Q8-4:补全下列模型配置代码 # [Your codes 19] # 设置输入样本的维度 input_spec = InputSpec(shape=[None] + train_parameters['input_size'], dtype='float32', name='image') label_spec = InputSpec(shape=[None, 1], dtype='int64', name='label') # 初始化AlexNet,并进行实例化 network = AlexNet(num_classes=args['class_dim']) model = paddle.Model(network, input_spec, label_spec) logger.info('模型参数信息:') logger.info(model.summary()) # 是否显示神经网络的具体信息 # 设置学习率、优化器、损失函数和评价指标 lr = learning_rate_setting(args=args, argsO=argsLS) optimizer = optimizer_setting(model, lr, argsO=argsLS) model.prepare(optimizer, paddle.nn.CrossEntropyLoss(), paddle.metric.Accuracy(topk=(1,5))) # 启动训练过程 start = time.perf_counter() train(model) logger.info('训练完毕,结果路径{}.'.format(result_root_path)) # 输出训练过程图 logger.info('Done.') draw_process("Training Process", 'Train Loss', 'Train Accuracy(top1)', all_train_iters, all_train_losses, all_train_accs_top1, final_figures_path=final_figures_path, figurename='train', isShow=True) draw_process("Validation Results", 'Validation Loss', 'Validation Accuracy(top1)', all_test_iters, all_test_losses, all_test_accs_top1, final_figures_path=final_figures_path, figurename='val', isShow=True) # 参考结果 ############################################################## # INFO 2022-10-10 20:26:32,960 3402686128.py:76] 训练完成,最终性能accuracy=0.53731(epoch=25), 总耗时224.63s, 已将其保存为:Butterfly_Alexnet_final # INFO 2022-10-10 20:26:32,961 3402686128.py:112] 训练完毕,结果路径D:\Workspace\ExpResults\Project011AlexNetButterfly.

2022-10-11 14:27:09,969 - INFO: 系统基本信息

2022-10-11 14:27:09,970 - INFO: {

"操作系统":"Windows-10-10.0.22000-SP0",

"CPU":"Intel(R) Core(TM) i7-9700 CPU @ 3.00GHz",

"内存":"8.30G/15.88G (52.20%)",

"GPU":"b'GeForce RTX 2080' 2.09G/8.00G (0.26%)",

"CUDA":"7.6.5",

"cuDNN":"7.6.5",

"Paddle":"2.3.2"

}

2022-10-11 14:27:09,971 - INFO: {

"project_name":"Project011AlexNetButterfly",

"dataset_name":"Butterfly",

"architecture":"Alexnet",

"training_data":"train",

"starting_time":"202210111427",

"input_size":[

3,

227,

227

],

"mean_value":[

0.485,

0.456,

0.406

],

"std_value":[

0.229,

0.224,

0.225

],

"num_trainval":490,

"num_train":423,

"num_val":67,

"num_test":129,

"class_dim":7,

"label_dict":{

"0":"admiral",

"1":"black_swallowtail",

"2":"machaon",

"3":"monarch_closed",

"4":"monarch_open",

"5":"peacock",

"6":"zebra"

},

"total_epoch":30,

"batch_size":64,

"log_interval":1,

"eval_interval":1,

"dataset_root_path":"D:\\Workspace\\ExpDatasets\\",

"result_root_path":"D:\\Workspace\\ExpResults\\",

"deployment_root_path":"D:\\Workspace\\ExpDeployments\\",

"useGPU":true,

"learning_strategy":{

"optimizer_strategy":"Momentum",

"learning_rate_strategy":"CosineAnnealingDecay",

"learning_rate":0.001,

"momentum":0.9,

"Piecewise_boundaries":[

60,

80,

90

],

"Piecewise_values":[

0.01,

0.001,

0.0001,

1e-05

],

"Exponential_gamma":0.9,

"Polynomial_decay_steps":10,

"verbose":true

},

"augmentation_strategy":{

"withAugmentation":true,

"augmentation_prob":0.5,

"rotate_angle":15,

"Hflip_prob":0.5,

"brightness":0.4,

"contrast":0.4,

"saturation":0.4,

"hue":0.4

}

}

2022-10-11 14:27:09,972 - INFO: 训练参数保存完毕,使用Alexnet模型, 训练Butterfly数据, 训练集train, 启动训练...

2022-10-11 14:27:09,973 - INFO: 当前模型目录为:Butterfly_Alexnet_202210111427

2022-10-11 14:27:09,978 - INFO: 模型参数信息:

2022-10-11 14:27:09,983 - INFO: {'total_params': 58310023, 'trainable_params': 58310023}

---------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===========================================================================

Conv2D-11 [[1, 3, 227, 227]] [1, 96, 55, 55] 34,944

ReLU-15 [[1, 96, 55, 55]] [1, 96, 55, 55] 0

MaxPool2D-7 [[1, 96, 55, 55]] [1, 96, 27, 27] 0

Conv2D-12 [[1, 96, 27, 27]] [1, 256, 27, 27] 614,656

ReLU-16 [[1, 256, 27, 27]] [1, 256, 27, 27] 0

MaxPool2D-8 [[1, 256, 27, 27]] [1, 256, 13, 13] 0

Conv2D-13 [[1, 256, 13, 13]] [1, 384, 13, 13] 885,120

ReLU-17 [[1, 384, 13, 13]] [1, 384, 13, 13] 0

Conv2D-14 [[1, 384, 13, 13]] [1, 384, 13, 13] 1,327,488

ReLU-18 [[1, 384, 13, 13]] [1, 384, 13, 13] 0

Conv2D-15 [[1, 384, 13, 13]] [1, 256, 13, 13] 884,992

ReLU-19 [[1, 256, 13, 13]] [1, 256, 13, 13] 0

MaxPool2D-9 [[1, 256, 13, 13]] [1, 256, 6, 6] 0

Linear-8 [[1, 9216]] [1, 4096] 37,752,832

ReLU-20 [[1, 4096]] [1, 4096] 0

Dropout-5 [[1, 4096]] [1, 4096] 0

Linear-9 [[1, 4096]] [1, 4096] 16,781,312

ReLU-21 [[1, 4096]] [1, 4096] 0

Dropout-6 [[1, 4096]] [1, 4096] 0

Linear-10 [[1, 4096]] [1, 7] 28,679

===========================================================================

Total params: 58,310,023

Trainable params: 58,310,023

Non-trainable params: 0

---------------------------------------------------------------------------

Input size (MB): 0.59

Forward/backward pass size (MB): 11.04

Params size (MB): 222.44

Estimated Total Size (MB): 234.07

---------------------------------------------------------------------------

当前学习率策略为: Momentum + CosineAnnealingDecay

Epoch 0: CosineAnnealingDecay set learning rate to 0.001.

2022-10-11 14:27:10,309 - INFO: Epoch:1/30, batch:1, train_loss:[8.58122], acc_top1:[0.14062], acc_top5:[0.67188](0.33s)

2022-10-11 14:27:10,548 - INFO: Epoch:1/30, batch:2, train_loss:[9.27832], acc_top1:[0.23438], acc_top5:[0.87500](0.24s)

2022-10-11 14:27:10,788 - INFO: Epoch:1/30, batch:3, train_loss:[6.12532], acc_top1:[0.18750], acc_top5:[0.75000](0.24s)

2022-10-11 14:27:11,061 - INFO: Epoch:1/30, batch:4, train_loss:[5.05255], acc_top1:[0.14062], acc_top5:[0.68750](0.27s)

2022-10-11 14:27:11,333 - INFO: Epoch:1/30, batch:5, train_loss:[4.21189], acc_top1:[0.20312], acc_top5:[0.71875](0.27s)

2022-10-11 14:27:11,566 - INFO: Epoch:1/30, batch:6, train_loss:[3.12470], acc_top1:[0.18750], acc_top5:[0.75000](0.23s)

2022-10-11 14:27:11,810 - INFO: [validation] Epoch:1/30, val_loss:[0.04924], val_top1:[0.28358], val_top5:[0.86567]

2022-10-11 14:27:21,377 - INFO: 已保存当前测试模型(epoch=1)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:27:21,379 - INFO: 最优top1测试精度:0.28358 (epoch=1)

2022-10-11 14:27:21,664 - INFO: Epoch:2/30, batch:7, train_loss:[2.28543], acc_top1:[0.20312], acc_top5:[0.82812](10.10s)

2022-10-11 14:27:21,907 - INFO: Epoch:2/30, batch:8, train_loss:[2.34809], acc_top1:[0.15625], acc_top5:[0.84375](0.24s)

2022-10-11 14:27:22,164 - INFO: Epoch:2/30, batch:9, train_loss:[2.18105], acc_top1:[0.23438], acc_top5:[0.75000](0.26s)

2022-10-11 14:27:22,416 - INFO: Epoch:2/30, batch:10, train_loss:[2.32676], acc_top1:[0.15625], acc_top5:[0.79688](0.25s)

2022-10-11 14:27:22,680 - INFO: Epoch:2/30, batch:11, train_loss:[1.95116], acc_top1:[0.20312], acc_top5:[0.84375](0.26s)

2022-10-11 14:27:22,919 - INFO: Epoch:2/30, batch:12, train_loss:[1.96834], acc_top1:[0.14062], acc_top5:[0.79688](0.24s)

2022-10-11 14:27:23,157 - INFO: [validation] Epoch:2/30, val_loss:[0.04965], val_top1:[0.25373], val_top5:[0.83582]

2022-10-11 14:27:23,158 - INFO: 最优top1测试精度:0.28358 (epoch=1)

2022-10-11 14:27:23,436 - INFO: Epoch:3/30, batch:13, train_loss:[1.88661], acc_top1:[0.21875], acc_top5:[0.85938](0.52s)

2022-10-11 14:27:23,678 - INFO: Epoch:3/30, batch:14, train_loss:[1.96422], acc_top1:[0.21875], acc_top5:[0.79688](0.24s)

2022-10-11 14:27:23,915 - INFO: Epoch:3/30, batch:15, train_loss:[2.07804], acc_top1:[0.21875], acc_top5:[0.75000](0.24s)

2022-10-11 14:27:24,162 - INFO: Epoch:3/30, batch:16, train_loss:[1.92753], acc_top1:[0.21875], acc_top5:[0.82812](0.25s)

2022-10-11 14:27:24,422 - INFO: Epoch:3/30, batch:17, train_loss:[1.90529], acc_top1:[0.21875], acc_top5:[0.79688](0.26s)

2022-10-11 14:27:24,650 - INFO: Epoch:3/30, batch:18, train_loss:[1.87884], acc_top1:[0.18750], acc_top5:[0.82812](0.23s)

2022-10-11 14:27:24,914 - INFO: [validation] Epoch:3/30, val_loss:[0.05146], val_top1:[0.35821], val_top5:[0.92537]

2022-10-11 14:27:33,349 - INFO: 已保存当前测试模型(epoch=3)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:27:33,349 - INFO: 最优top1测试精度:0.35821 (epoch=3)

2022-10-11 14:27:33,611 - INFO: Epoch:4/30, batch:19, train_loss:[1.88176], acc_top1:[0.25000], acc_top5:[0.81250](8.96s)

2022-10-11 14:27:33,849 - INFO: Epoch:4/30, batch:20, train_loss:[1.83391], acc_top1:[0.28125], acc_top5:[0.85938](0.24s)

2022-10-11 14:27:34,095 - INFO: Epoch:4/30, batch:21, train_loss:[1.93049], acc_top1:[0.29688], acc_top5:[0.73438](0.25s)

2022-10-11 14:27:34,333 - INFO: Epoch:4/30, batch:22, train_loss:[1.86220], acc_top1:[0.21875], acc_top5:[0.87500](0.24s)

2022-10-11 14:27:34,568 - INFO: Epoch:4/30, batch:23, train_loss:[1.89167], acc_top1:[0.21875], acc_top5:[0.85938](0.24s)

2022-10-11 14:27:34,806 - INFO: Epoch:4/30, batch:24, train_loss:[1.88335], acc_top1:[0.34375], acc_top5:[0.78125](0.24s)

2022-10-11 14:27:35,059 - INFO: [validation] Epoch:4/30, val_loss:[0.05295], val_top1:[0.34328], val_top5:[0.89552]

2022-10-11 14:27:35,059 - INFO: 最优top1测试精度:0.35821 (epoch=3)

2022-10-11 14:27:35,307 - INFO: Epoch:5/30, batch:25, train_loss:[1.81228], acc_top1:[0.28125], acc_top5:[0.84375](0.50s)

2022-10-11 14:27:35,547 - INFO: Epoch:5/30, batch:26, train_loss:[1.82617], acc_top1:[0.34375], acc_top5:[0.82812](0.24s)

2022-10-11 14:27:35,780 - INFO: Epoch:5/30, batch:27, train_loss:[1.84644], acc_top1:[0.21875], acc_top5:[0.85938](0.23s)

2022-10-11 14:27:36,023 - INFO: Epoch:5/30, batch:28, train_loss:[1.87636], acc_top1:[0.25000], acc_top5:[0.85938](0.24s)

2022-10-11 14:27:36,263 - INFO: Epoch:5/30, batch:29, train_loss:[1.73566], acc_top1:[0.31250], acc_top5:[0.89062](0.24s)

2022-10-11 14:27:36,514 - INFO: Epoch:5/30, batch:30, train_loss:[1.81004], acc_top1:[0.29688], acc_top5:[0.92188](0.25s)

2022-10-11 14:27:36,761 - INFO: [validation] Epoch:5/30, val_loss:[0.05013], val_top1:[0.26866], val_top5:[0.86567]

2022-10-11 14:27:36,762 - INFO: 最优top1测试精度:0.35821 (epoch=3)

2022-10-11 14:27:36,984 - INFO: Epoch:6/30, batch:31, train_loss:[1.76742], acc_top1:[0.42188], acc_top5:[0.82812](0.47s)

2022-10-11 14:27:37,247 - INFO: Epoch:6/30, batch:32, train_loss:[1.81308], acc_top1:[0.23438], acc_top5:[0.93750](0.26s)

2022-10-11 14:27:37,498 - INFO: Epoch:6/30, batch:33, train_loss:[1.74765], acc_top1:[0.34375], acc_top5:[0.87500](0.25s)

2022-10-11 14:27:37,724 - INFO: Epoch:6/30, batch:34, train_loss:[1.73313], acc_top1:[0.28125], acc_top5:[0.87500](0.23s)

2022-10-11 14:27:37,970 - INFO: Epoch:6/30, batch:35, train_loss:[1.88628], acc_top1:[0.29688], acc_top5:[0.84375](0.25s)

2022-10-11 14:27:38,225 - INFO: Epoch:6/30, batch:36, train_loss:[1.79765], acc_top1:[0.23438], acc_top5:[0.82812](0.25s)

2022-10-11 14:27:38,469 - INFO: [validation] Epoch:6/30, val_loss:[0.04388], val_top1:[0.32836], val_top5:[0.88060]

2022-10-11 14:27:38,470 - INFO: 最优top1测试精度:0.35821 (epoch=3)

2022-10-11 14:27:38,723 - INFO: Epoch:7/30, batch:37, train_loss:[1.72171], acc_top1:[0.39062], acc_top5:[0.90625](0.50s)

2022-10-11 14:27:38,967 - INFO: Epoch:7/30, batch:38, train_loss:[1.72064], acc_top1:[0.26562], acc_top5:[0.89062](0.24s)

2022-10-11 14:27:39,210 - INFO: Epoch:7/30, batch:39, train_loss:[1.75008], acc_top1:[0.34375], acc_top5:[0.85938](0.24s)

2022-10-11 14:27:39,453 - INFO: Epoch:7/30, batch:40, train_loss:[1.68113], acc_top1:[0.34375], acc_top5:[0.90625](0.24s)

2022-10-11 14:27:39,702 - INFO: Epoch:7/30, batch:41, train_loss:[1.64252], acc_top1:[0.42188], acc_top5:[0.92188](0.25s)

2022-10-11 14:27:39,956 - INFO: Epoch:7/30, batch:42, train_loss:[1.69408], acc_top1:[0.26562], acc_top5:[0.90625](0.25s)

2022-10-11 14:27:40,200 - INFO: [validation] Epoch:7/30, val_loss:[0.04253], val_top1:[0.40299], val_top5:[0.94030]

2022-10-11 14:27:49,581 - INFO: 已保存当前测试模型(epoch=7)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:27:49,582 - INFO: 最优top1测试精度:0.40299 (epoch=7)

2022-10-11 14:27:49,846 - INFO: Epoch:8/30, batch:43, train_loss:[1.69700], acc_top1:[0.40625], acc_top5:[0.87500](9.89s)

2022-10-11 14:27:50,096 - INFO: Epoch:8/30, batch:44, train_loss:[1.69638], acc_top1:[0.32812], acc_top5:[0.92188](0.25s)

2022-10-11 14:27:50,320 - INFO: Epoch:8/30, batch:45, train_loss:[1.71523], acc_top1:[0.32812], acc_top5:[0.89062](0.22s)

2022-10-11 14:27:50,554 - INFO: Epoch:8/30, batch:46, train_loss:[1.58588], acc_top1:[0.43750], acc_top5:[0.95312](0.23s)

2022-10-11 14:27:50,812 - INFO: Epoch:8/30, batch:47, train_loss:[1.55236], acc_top1:[0.46875], acc_top5:[0.92188](0.26s)

2022-10-11 14:27:51,036 - INFO: Epoch:8/30, batch:48, train_loss:[1.69820], acc_top1:[0.37500], acc_top5:[0.85938](0.22s)

2022-10-11 14:27:51,282 - INFO: [validation] Epoch:8/30, val_loss:[0.04368], val_top1:[0.44776], val_top5:[0.94030]

2022-10-11 14:28:00,433 - INFO: 已保存当前测试模型(epoch=8)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:00,433 - INFO: 最优top1测试精度:0.44776 (epoch=8)

2022-10-11 14:28:00,720 - INFO: Epoch:9/30, batch:49, train_loss:[1.53833], acc_top1:[0.45312], acc_top5:[0.90625](9.68s)

2022-10-11 14:28:00,969 - INFO: Epoch:9/30, batch:50, train_loss:[1.50505], acc_top1:[0.56250], acc_top5:[0.92188](0.25s)

2022-10-11 14:28:01,226 - INFO: Epoch:9/30, batch:51, train_loss:[1.60447], acc_top1:[0.46875], acc_top5:[0.85938](0.26s)

2022-10-11 14:28:01,444 - INFO: Epoch:9/30, batch:52, train_loss:[1.46346], acc_top1:[0.50000], acc_top5:[0.93750](0.22s)

2022-10-11 14:28:01,682 - INFO: Epoch:9/30, batch:53, train_loss:[1.59358], acc_top1:[0.31250], acc_top5:[0.89062](0.24s)

2022-10-11 14:28:01,903 - INFO: Epoch:9/30, batch:54, train_loss:[1.52010], acc_top1:[0.42188], acc_top5:[0.90625](0.22s)

2022-10-11 14:28:02,158 - INFO: [validation] Epoch:9/30, val_loss:[0.03196], val_top1:[0.47761], val_top5:[0.95522]

2022-10-11 14:28:11,116 - INFO: 已保存当前测试模型(epoch=9)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:11,116 - INFO: 最优top1测试精度:0.47761 (epoch=9)

2022-10-11 14:28:11,397 - INFO: Epoch:10/30, batch:55, train_loss:[1.45523], acc_top1:[0.54688], acc_top5:[0.89062](9.49s)

2022-10-11 14:28:11,649 - INFO: Epoch:10/30, batch:56, train_loss:[1.42583], acc_top1:[0.50000], acc_top5:[0.93750](0.25s)

2022-10-11 14:28:11,888 - INFO: Epoch:10/30, batch:57, train_loss:[1.33312], acc_top1:[0.53125], acc_top5:[0.96875](0.24s)

2022-10-11 14:28:12,131 - INFO: Epoch:10/30, batch:58, train_loss:[1.54871], acc_top1:[0.43750], acc_top5:[0.92188](0.24s)

2022-10-11 14:28:12,396 - INFO: Epoch:10/30, batch:59, train_loss:[1.52692], acc_top1:[0.40625], acc_top5:[0.93750](0.27s)

2022-10-11 14:28:12,637 - INFO: Epoch:10/30, batch:60, train_loss:[1.71244], acc_top1:[0.37500], acc_top5:[0.89062](0.24s)

2022-10-11 14:28:12,894 - INFO: [validation] Epoch:10/30, val_loss:[0.04470], val_top1:[0.47761], val_top5:[0.91045]

2022-10-11 14:28:12,895 - INFO: 最优top1测试精度:0.47761 (epoch=9)

2022-10-11 14:28:13,177 - INFO: Epoch:11/30, batch:61, train_loss:[1.40446], acc_top1:[0.53125], acc_top5:[0.95312](0.54s)

2022-10-11 14:28:13,438 - INFO: Epoch:11/30, batch:62, train_loss:[1.48073], acc_top1:[0.45312], acc_top5:[0.95312](0.26s)

2022-10-11 14:28:13,686 - INFO: Epoch:11/30, batch:63, train_loss:[1.36373], acc_top1:[0.46875], acc_top5:[0.93750](0.25s)

2022-10-11 14:28:13,929 - INFO: Epoch:11/30, batch:64, train_loss:[1.23771], acc_top1:[0.54688], acc_top5:[1.00000](0.24s)

2022-10-11 14:28:14,154 - INFO: Epoch:11/30, batch:65, train_loss:[1.37355], acc_top1:[0.56250], acc_top5:[0.92188](0.22s)

2022-10-11 14:28:14,390 - INFO: Epoch:11/30, batch:66, train_loss:[1.35317], acc_top1:[0.53125], acc_top5:[0.96875](0.24s)

2022-10-11 14:28:14,631 - INFO: [validation] Epoch:11/30, val_loss:[0.02569], val_top1:[0.55224], val_top5:[0.95522]

2022-10-11 14:28:23,320 - INFO: 已保存当前测试模型(epoch=11)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:23,322 - INFO: 最优top1测试精度:0.55224 (epoch=11)

2022-10-11 14:28:23,564 - INFO: Epoch:12/30, batch:67, train_loss:[1.43110], acc_top1:[0.45312], acc_top5:[0.92188](9.17s)

2022-10-11 14:28:23,810 - INFO: Epoch:12/30, batch:68, train_loss:[1.29885], acc_top1:[0.56250], acc_top5:[0.92188](0.24s)

2022-10-11 14:28:24,060 - INFO: Epoch:12/30, batch:69, train_loss:[1.33487], acc_top1:[0.45312], acc_top5:[0.98438](0.25s)

2022-10-11 14:28:24,293 - INFO: Epoch:12/30, batch:70, train_loss:[1.13638], acc_top1:[0.62500], acc_top5:[0.96875](0.23s)

2022-10-11 14:28:24,528 - INFO: Epoch:12/30, batch:71, train_loss:[1.18268], acc_top1:[0.56250], acc_top5:[0.98438](0.23s)

2022-10-11 14:28:24,762 - INFO: Epoch:12/30, batch:72, train_loss:[1.37893], acc_top1:[0.45312], acc_top5:[0.90625](0.23s)

2022-10-11 14:28:25,004 - INFO: [validation] Epoch:12/30, val_loss:[0.02197], val_top1:[0.59701], val_top5:[0.98507]

2022-10-11 14:28:33,729 - INFO: 已保存当前测试模型(epoch=12)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:33,731 - INFO: 最优top1测试精度:0.59701 (epoch=12)

2022-10-11 14:28:33,990 - INFO: Epoch:13/30, batch:73, train_loss:[1.36232], acc_top1:[0.45312], acc_top5:[0.98438](9.23s)

2022-10-11 14:28:34,236 - INFO: Epoch:13/30, batch:74, train_loss:[1.26470], acc_top1:[0.50000], acc_top5:[0.96875](0.25s)

2022-10-11 14:28:34,452 - INFO: Epoch:13/30, batch:75, train_loss:[1.22077], acc_top1:[0.57812], acc_top5:[0.95312](0.22s)

2022-10-11 14:28:34,696 - INFO: Epoch:13/30, batch:76, train_loss:[1.15869], acc_top1:[0.57812], acc_top5:[0.93750](0.24s)

2022-10-11 14:28:34,937 - INFO: Epoch:13/30, batch:77, train_loss:[1.21420], acc_top1:[0.57812], acc_top5:[0.95312](0.24s)

2022-10-11 14:28:35,170 - INFO: Epoch:13/30, batch:78, train_loss:[1.05542], acc_top1:[0.67188], acc_top5:[1.00000](0.23s)

2022-10-11 14:28:35,408 - INFO: [validation] Epoch:13/30, val_loss:[0.02157], val_top1:[0.50746], val_top5:[0.95522]

2022-10-11 14:28:35,409 - INFO: 最优top1测试精度:0.59701 (epoch=12)

2022-10-11 14:28:35,654 - INFO: Epoch:14/30, batch:79, train_loss:[1.36849], acc_top1:[0.43750], acc_top5:[0.96875](0.48s)

2022-10-11 14:28:35,893 - INFO: Epoch:14/30, batch:80, train_loss:[1.16132], acc_top1:[0.62500], acc_top5:[0.95312](0.24s)

2022-10-11 14:28:36,111 - INFO: Epoch:14/30, batch:81, train_loss:[1.18109], acc_top1:[0.60938], acc_top5:[0.95312](0.22s)

2022-10-11 14:28:36,334 - INFO: Epoch:14/30, batch:82, train_loss:[1.18723], acc_top1:[0.59375], acc_top5:[1.00000](0.22s)

2022-10-11 14:28:36,595 - INFO: Epoch:14/30, batch:83, train_loss:[1.05234], acc_top1:[0.65625], acc_top5:[0.98438](0.26s)

2022-10-11 14:28:36,834 - INFO: Epoch:14/30, batch:84, train_loss:[0.97124], acc_top1:[0.67188], acc_top5:[0.96875](0.24s)

2022-10-11 14:28:37,075 - INFO: [validation] Epoch:14/30, val_loss:[0.01835], val_top1:[0.65672], val_top5:[0.98507]

2022-10-11 14:28:46,224 - INFO: 已保存当前测试模型(epoch=14)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:46,225 - INFO: 最优top1测试精度:0.65672 (epoch=14)

2022-10-11 14:28:46,495 - INFO: Epoch:15/30, batch:85, train_loss:[1.16429], acc_top1:[0.54688], acc_top5:[0.98438](9.66s)

2022-10-11 14:28:46,754 - INFO: Epoch:15/30, batch:86, train_loss:[1.18765], acc_top1:[0.54688], acc_top5:[0.98438](0.26s)

2022-10-11 14:28:47,000 - INFO: Epoch:15/30, batch:87, train_loss:[1.05713], acc_top1:[0.64062], acc_top5:[0.98438](0.25s)

2022-10-11 14:28:47,220 - INFO: Epoch:15/30, batch:88, train_loss:[1.15465], acc_top1:[0.54688], acc_top5:[0.95312](0.22s)

2022-10-11 14:28:47,469 - INFO: Epoch:15/30, batch:89, train_loss:[1.10060], acc_top1:[0.67188], acc_top5:[0.98438](0.25s)

2022-10-11 14:28:47,700 - INFO: Epoch:15/30, batch:90, train_loss:[0.90586], acc_top1:[0.71875], acc_top5:[0.98438](0.23s)

2022-10-11 14:28:47,939 - INFO: [validation] Epoch:15/30, val_loss:[0.01752], val_top1:[0.62687], val_top5:[0.97015]

2022-10-11 14:28:47,939 - INFO: 最优top1测试精度:0.65672 (epoch=14)

2022-10-11 14:28:48,192 - INFO: Epoch:16/30, batch:91, train_loss:[0.85901], acc_top1:[0.65625], acc_top5:[1.00000](0.49s)

2022-10-11 14:28:48,434 - INFO: Epoch:16/30, batch:92, train_loss:[0.94025], acc_top1:[0.64062], acc_top5:[1.00000](0.24s)

2022-10-11 14:28:48,678 - INFO: Epoch:16/30, batch:93, train_loss:[0.90625], acc_top1:[0.62500], acc_top5:[0.98438](0.24s)

2022-10-11 14:28:48,915 - INFO: Epoch:16/30, batch:94, train_loss:[1.09179], acc_top1:[0.59375], acc_top5:[0.93750](0.24s)

2022-10-11 14:28:49,147 - INFO: Epoch:16/30, batch:95, train_loss:[0.96562], acc_top1:[0.62500], acc_top5:[0.98438](0.23s)

2022-10-11 14:28:49,373 - INFO: Epoch:16/30, batch:96, train_loss:[0.90630], acc_top1:[0.65625], acc_top5:[1.00000](0.23s)

2022-10-11 14:28:49,613 - INFO: [validation] Epoch:16/30, val_loss:[0.01697], val_top1:[0.67164], val_top5:[0.98507]

2022-10-11 14:28:58,114 - INFO: 已保存当前测试模型(epoch=16)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:28:58,116 - INFO: 最优top1测试精度:0.67164 (epoch=16)

2022-10-11 14:28:58,381 - INFO: Epoch:17/30, batch:97, train_loss:[0.76398], acc_top1:[0.71875], acc_top5:[1.00000](9.01s)

2022-10-11 14:28:58,635 - INFO: Epoch:17/30, batch:98, train_loss:[0.71395], acc_top1:[0.75000], acc_top5:[1.00000](0.25s)

2022-10-11 14:28:58,879 - INFO: Epoch:17/30, batch:99, train_loss:[0.94206], acc_top1:[0.59375], acc_top5:[1.00000](0.24s)

2022-10-11 14:28:59,117 - INFO: Epoch:17/30, batch:100, train_loss:[0.75781], acc_top1:[0.75000], acc_top5:[0.96875](0.24s)

2022-10-11 14:28:59,354 - INFO: Epoch:17/30, batch:101, train_loss:[0.86154], acc_top1:[0.71875], acc_top5:[0.98438](0.24s)

2022-10-11 14:28:59,590 - INFO: Epoch:17/30, batch:102, train_loss:[1.18466], acc_top1:[0.51562], acc_top5:[1.00000](0.24s)

2022-10-11 14:28:59,831 - INFO: [validation] Epoch:17/30, val_loss:[0.01415], val_top1:[0.68657], val_top5:[0.98507]

2022-10-11 14:29:08,715 - INFO: 已保存当前测试模型(epoch=17)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:29:08,716 - INFO: 最优top1测试精度:0.68657 (epoch=17)

2022-10-11 14:29:08,975 - INFO: Epoch:18/30, batch:103, train_loss:[0.66910], acc_top1:[0.76562], acc_top5:[1.00000](9.38s)

2022-10-11 14:29:09,217 - INFO: Epoch:18/30, batch:104, train_loss:[1.02072], acc_top1:[0.60938], acc_top5:[0.96875](0.24s)

2022-10-11 14:29:09,471 - INFO: Epoch:18/30, batch:105, train_loss:[0.80554], acc_top1:[0.70312], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:09,691 - INFO: Epoch:18/30, batch:106, train_loss:[0.71340], acc_top1:[0.71875], acc_top5:[0.98438](0.22s)

2022-10-11 14:29:09,942 - INFO: Epoch:18/30, batch:107, train_loss:[0.85709], acc_top1:[0.70312], acc_top5:[0.98438](0.25s)

2022-10-11 14:29:10,172 - INFO: Epoch:18/30, batch:108, train_loss:[0.74157], acc_top1:[0.67188], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:10,407 - INFO: [validation] Epoch:18/30, val_loss:[0.01535], val_top1:[0.64179], val_top5:[0.98507]

2022-10-11 14:29:10,408 - INFO: 最优top1测试精度:0.68657 (epoch=17)

2022-10-11 14:29:10,662 - INFO: Epoch:19/30, batch:109, train_loss:[0.77239], acc_top1:[0.73438], acc_top5:[0.98438](0.49s)

2022-10-11 14:29:10,901 - INFO: Epoch:19/30, batch:110, train_loss:[0.77414], acc_top1:[0.70312], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:11,145 - INFO: Epoch:19/30, batch:111, train_loss:[0.77630], acc_top1:[0.70312], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:11,371 - INFO: Epoch:19/30, batch:112, train_loss:[0.82933], acc_top1:[0.65625], acc_top5:[0.98438](0.23s)

2022-10-11 14:29:11,615 - INFO: Epoch:19/30, batch:113, train_loss:[0.87711], acc_top1:[0.68750], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:11,855 - INFO: Epoch:19/30, batch:114, train_loss:[0.66331], acc_top1:[0.75000], acc_top5:[0.98438](0.24s)

2022-10-11 14:29:12,090 - INFO: [validation] Epoch:19/30, val_loss:[0.01436], val_top1:[0.73134], val_top5:[0.98507]

2022-10-11 14:29:20,637 - INFO: 已保存当前测试模型(epoch=19)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:29:20,638 - INFO: 最优top1测试精度:0.73134 (epoch=19)

2022-10-11 14:29:20,873 - INFO: Epoch:20/30, batch:115, train_loss:[0.65336], acc_top1:[0.76562], acc_top5:[1.00000](9.02s)

2022-10-11 14:29:21,114 - INFO: Epoch:20/30, batch:116, train_loss:[0.69642], acc_top1:[0.75000], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:21,376 - INFO: Epoch:20/30, batch:117, train_loss:[0.67610], acc_top1:[0.79688], acc_top5:[1.00000](0.26s)

2022-10-11 14:29:21,618 - INFO: Epoch:20/30, batch:118, train_loss:[0.70814], acc_top1:[0.81250], acc_top5:[0.98438](0.24s)

2022-10-11 14:29:21,877 - INFO: Epoch:20/30, batch:119, train_loss:[0.63044], acc_top1:[0.76562], acc_top5:[0.98438](0.26s)

2022-10-11 14:29:22,120 - INFO: Epoch:20/30, batch:120, train_loss:[0.69328], acc_top1:[0.79688], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:22,371 - INFO: [validation] Epoch:20/30, val_loss:[0.01269], val_top1:[0.71642], val_top5:[0.98507]

2022-10-11 14:29:22,372 - INFO: 最优top1测试精度:0.73134 (epoch=19)

2022-10-11 14:29:22,656 - INFO: Epoch:21/30, batch:121, train_loss:[0.80583], acc_top1:[0.70312], acc_top5:[0.96875](0.54s)

2022-10-11 14:29:22,920 - INFO: Epoch:21/30, batch:122, train_loss:[0.59786], acc_top1:[0.79688], acc_top5:[1.00000](0.26s)

2022-10-11 14:29:23,140 - INFO: Epoch:21/30, batch:123, train_loss:[0.62411], acc_top1:[0.75000], acc_top5:[1.00000](0.22s)

2022-10-11 14:29:23,381 - INFO: Epoch:21/30, batch:124, train_loss:[0.68868], acc_top1:[0.73438], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:23,622 - INFO: Epoch:21/30, batch:125, train_loss:[0.63473], acc_top1:[0.78125], acc_top5:[0.96875](0.24s)

2022-10-11 14:29:23,828 - INFO: Epoch:21/30, batch:126, train_loss:[0.55362], acc_top1:[0.78125], acc_top5:[1.00000](0.21s)

2022-10-11 14:29:24,078 - INFO: [validation] Epoch:21/30, val_loss:[0.01130], val_top1:[0.76119], val_top5:[0.98507]

2022-10-11 14:29:33,085 - INFO: 已保存当前测试模型(epoch=21)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:29:33,086 - INFO: 最优top1测试精度:0.76119 (epoch=21)

2022-10-11 14:29:33,337 - INFO: Epoch:22/30, batch:127, train_loss:[0.61114], acc_top1:[0.78125], acc_top5:[1.00000](9.51s)

2022-10-11 14:29:33,570 - INFO: Epoch:22/30, batch:128, train_loss:[0.46089], acc_top1:[0.84375], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:33,803 - INFO: Epoch:22/30, batch:129, train_loss:[0.61836], acc_top1:[0.87500], acc_top5:[0.98438](0.23s)

2022-10-11 14:29:34,053 - INFO: Epoch:22/30, batch:130, train_loss:[0.61279], acc_top1:[0.79688], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:34,316 - INFO: Epoch:22/30, batch:131, train_loss:[0.53401], acc_top1:[0.82812], acc_top5:[1.00000](0.26s)

2022-10-11 14:29:34,553 - INFO: Epoch:22/30, batch:132, train_loss:[0.74388], acc_top1:[0.73438], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:34,798 - INFO: [validation] Epoch:22/30, val_loss:[0.01177], val_top1:[0.70149], val_top5:[1.00000]

2022-10-11 14:29:34,799 - INFO: 最优top1测试精度:0.76119 (epoch=21)

2022-10-11 14:29:35,060 - INFO: Epoch:23/30, batch:133, train_loss:[0.50972], acc_top1:[0.81250], acc_top5:[1.00000](0.51s)

2022-10-11 14:29:35,304 - INFO: Epoch:23/30, batch:134, train_loss:[0.55169], acc_top1:[0.81250], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:35,540 - INFO: Epoch:23/30, batch:135, train_loss:[0.64436], acc_top1:[0.75000], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:35,792 - INFO: Epoch:23/30, batch:136, train_loss:[0.45836], acc_top1:[0.85938], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:36,028 - INFO: Epoch:23/30, batch:137, train_loss:[0.40179], acc_top1:[0.90625], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:36,262 - INFO: Epoch:23/30, batch:138, train_loss:[0.56454], acc_top1:[0.78125], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:36,499 - INFO: [validation] Epoch:23/30, val_loss:[0.01075], val_top1:[0.79104], val_top5:[0.98507]

2022-10-11 14:29:45,508 - INFO: 已保存当前测试模型(epoch=23)为最优模型:Butterfly_Alexnet_final

2022-10-11 14:29:45,509 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:45,753 - INFO: Epoch:24/30, batch:139, train_loss:[0.53321], acc_top1:[0.78125], acc_top5:[1.00000](9.49s)

2022-10-11 14:29:45,996 - INFO: Epoch:24/30, batch:140, train_loss:[0.42143], acc_top1:[0.89062], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:46,236 - INFO: Epoch:24/30, batch:141, train_loss:[0.43966], acc_top1:[0.84375], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:46,483 - INFO: Epoch:24/30, batch:142, train_loss:[0.44777], acc_top1:[0.84375], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:46,719 - INFO: Epoch:24/30, batch:143, train_loss:[0.38267], acc_top1:[0.87500], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:46,965 - INFO: Epoch:24/30, batch:144, train_loss:[0.47105], acc_top1:[0.82812], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:47,217 - INFO: [validation] Epoch:24/30, val_loss:[0.01046], val_top1:[0.74627], val_top5:[1.00000]

2022-10-11 14:29:47,217 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:47,458 - INFO: Epoch:25/30, batch:145, train_loss:[0.37662], acc_top1:[0.84375], acc_top5:[1.00000](0.49s)

2022-10-11 14:29:47,694 - INFO: Epoch:25/30, batch:146, train_loss:[0.50567], acc_top1:[0.79688], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:47,937 - INFO: Epoch:25/30, batch:147, train_loss:[0.34054], acc_top1:[0.89062], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:48,180 - INFO: Epoch:25/30, batch:148, train_loss:[0.51517], acc_top1:[0.82812], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:48,431 - INFO: Epoch:25/30, batch:149, train_loss:[0.50459], acc_top1:[0.79688], acc_top5:[0.98438](0.25s)

2022-10-11 14:29:48,659 - INFO: Epoch:25/30, batch:150, train_loss:[0.32391], acc_top1:[0.85938], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:48,897 - INFO: [validation] Epoch:25/30, val_loss:[0.01206], val_top1:[0.71642], val_top5:[0.98507]

2022-10-11 14:29:48,897 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:49,153 - INFO: Epoch:26/30, batch:151, train_loss:[0.52685], acc_top1:[0.78125], acc_top5:[1.00000](0.49s)

2022-10-11 14:29:49,394 - INFO: Epoch:26/30, batch:152, train_loss:[0.45385], acc_top1:[0.79688], acc_top5:[0.98438](0.24s)

2022-10-11 14:29:49,636 - INFO: Epoch:26/30, batch:153, train_loss:[0.49367], acc_top1:[0.82812], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:49,883 - INFO: Epoch:26/30, batch:154, train_loss:[0.64614], acc_top1:[0.76562], acc_top5:[0.98438](0.25s)

2022-10-11 14:29:50,136 - INFO: Epoch:26/30, batch:155, train_loss:[0.32946], acc_top1:[0.92188], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:50,374 - INFO: Epoch:26/30, batch:156, train_loss:[0.33618], acc_top1:[0.89062], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:50,610 - INFO: [validation] Epoch:26/30, val_loss:[0.01287], val_top1:[0.71642], val_top5:[1.00000]

2022-10-11 14:29:50,611 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:50,873 - INFO: Epoch:27/30, batch:157, train_loss:[0.40305], acc_top1:[0.89062], acc_top5:[1.00000](0.50s)

2022-10-11 14:29:51,123 - INFO: Epoch:27/30, batch:158, train_loss:[0.40280], acc_top1:[0.84375], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:51,345 - INFO: Epoch:27/30, batch:159, train_loss:[0.33251], acc_top1:[0.89062], acc_top5:[1.00000](0.22s)

2022-10-11 14:29:51,578 - INFO: Epoch:27/30, batch:160, train_loss:[0.36853], acc_top1:[0.87500], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:51,815 - INFO: Epoch:27/30, batch:161, train_loss:[0.37527], acc_top1:[0.87500], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:52,052 - INFO: Epoch:27/30, batch:162, train_loss:[0.46566], acc_top1:[0.84375], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:52,292 - INFO: [validation] Epoch:27/30, val_loss:[0.00981], val_top1:[0.71642], val_top5:[1.00000]

2022-10-11 14:29:52,293 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:52,551 - INFO: Epoch:28/30, batch:163, train_loss:[0.27711], acc_top1:[0.90625], acc_top5:[1.00000](0.50s)

2022-10-11 14:29:52,800 - INFO: Epoch:28/30, batch:164, train_loss:[0.22629], acc_top1:[0.93750], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:53,040 - INFO: Epoch:28/30, batch:165, train_loss:[0.52509], acc_top1:[0.79688], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:53,272 - INFO: Epoch:28/30, batch:166, train_loss:[0.40808], acc_top1:[0.87500], acc_top5:[0.98438](0.23s)

2022-10-11 14:29:53,504 - INFO: Epoch:28/30, batch:167, train_loss:[0.36783], acc_top1:[0.89062], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:53,739 - INFO: Epoch:28/30, batch:168, train_loss:[0.28188], acc_top1:[0.90625], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:53,980 - INFO: [validation] Epoch:28/30, val_loss:[0.01104], val_top1:[0.73134], val_top5:[1.00000]

2022-10-11 14:29:53,981 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:54,236 - INFO: Epoch:29/30, batch:169, train_loss:[0.41030], acc_top1:[0.85938], acc_top5:[1.00000](0.50s)

2022-10-11 14:29:54,471 - INFO: Epoch:29/30, batch:170, train_loss:[0.20198], acc_top1:[0.96875], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:54,747 - INFO: Epoch:29/30, batch:171, train_loss:[0.22362], acc_top1:[0.95312], acc_top5:[1.00000](0.27s)

2022-10-11 14:29:54,992 - INFO: Epoch:29/30, batch:172, train_loss:[0.45420], acc_top1:[0.87500], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:55,219 - INFO: Epoch:29/30, batch:173, train_loss:[0.37256], acc_top1:[0.84375], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:55,446 - INFO: Epoch:29/30, batch:174, train_loss:[0.24015], acc_top1:[0.93750], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:55,685 - INFO: [validation] Epoch:29/30, val_loss:[0.00982], val_top1:[0.76119], val_top5:[1.00000]

2022-10-11 14:29:55,686 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:55,938 - INFO: Epoch:30/30, batch:175, train_loss:[0.16234], acc_top1:[0.98438], acc_top5:[1.00000](0.49s)

2022-10-11 14:29:56,184 - INFO: Epoch:30/30, batch:176, train_loss:[0.31238], acc_top1:[0.87500], acc_top5:[1.00000](0.25s)

2022-10-11 14:29:56,419 - INFO: Epoch:30/30, batch:177, train_loss:[0.23737], acc_top1:[0.90625], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:56,652 - INFO: Epoch:30/30, batch:178, train_loss:[0.32260], acc_top1:[0.87500], acc_top5:[1.00000](0.23s)

2022-10-11 14:29:56,894 - INFO: Epoch:30/30, batch:179, train_loss:[0.27361], acc_top1:[0.90625], acc_top5:[1.00000](0.24s)

2022-10-11 14:29:57,149 - INFO: Epoch:30/30, batch:180, train_loss:[0.25761], acc_top1:[0.93750], acc_top5:[1.00000](0.26s)

2022-10-11 14:29:57,384 - INFO: [validation] Epoch:30/30, val_loss:[0.01110], val_top1:[0.79104], val_top5:[0.98507]

2022-10-11 14:29:57,385 - INFO: 最优top1测试精度:0.79104 (epoch=23)

2022-10-11 14:29:57,385 - INFO: 训练完成,最终性能accuracy=0.79104(epoch=23), 总耗时167.40s, 已将其保存为:Butterfly_Alexnet_final

2022-10-11 14:29:57,386 - INFO: 训练完毕,结果路径D:\Workspace\ExpResults\Project011AlexNetButterfly.

2022-10-11 14:29:57,386 - INFO: Done.

训练完成后,建议将 ExpResults 文件夹的最终文件 copy 到 ExpDeployments 用于进行部署和应用。

3.5 离线测试

Paddle 2.0+的离线测试也抛弃了fluid方法。基本流程和训练是一致。

from paddle.static import InputSpec if __name__ == '__main__': # 设置输入样本的维度 input_spec = InputSpec(shape=[None] + args['input_size'], dtype='float32', name='image') label_spec = InputSpec(shape=[None, 1], dtype='int64', name='label') # 载入模型 network = AlexNet(num_classes=args['class_dim']) model = paddle.Model(network, input_spec, label_spec) # 模型实例化 model.load(deployment_checkpoint_path) # 载入调优模型的参数 model.prepare(loss=paddle.nn.CrossEntropyLoss(), # 设置loss metrics=paddle.metric.Accuracy(topk=(1,5))) # 设置评价指标 # 执行评估函数,并输出验证集样本的损失和精度 print('开始评估...') avg_loss, avg_acc_top1, avg_acc_top5 = eval(model, val_reader(), verbose=1) print('\r [验证集] 损失: {:.5f}, top1精度:{:.5f}, top5精度为:{:.5f} \n'.format(avg_loss, avg_acc_top1, avg_acc_top5), end='') avg_loss, avg_acc_top1, avg_acc_top5 = eval(model, test_reader(), verbose=1) print('\r [测试集] 损失: {:.5f}, top1精度:{:.5f}, top5精度为:{:.5f}'.format(avg_loss, avg_acc_top1, avg_acc_top5), end='')

开始评估...

[验证集] 损失: 0.04095, top1精度:0.53731, top5精度为:0.89552

[测试集] 损失: 0.03296, top1精度:0.44961, top5精度为:0.89922

【结果分析】

需要注意的是此处的精度与训练过程中输出的测试精度是不相同的,因为训练过程中使用的是验证集, 而这里的离线测试使用的是测试集.

【实验四】 模型推理和预测(应用)

实验摘要: 对训练过的模型,我们通过测试集进行模型效果评估,并可以在实际场景中进行预测,查看模型的效果。

实验目的:

- 学会使用部署和推理模型进行测试

- 学会对测试样本使用

基本预处理方法和十重切割对样本进行预处理 - 对于测试样本,能够实现批量测试test()和单样本推理predict()

4.1 导入依赖库及全局参数配置

Q9:完成下列推理部分的初始参数配置(10分) ([Your codes 19])

# 导入依赖库 import numpy as np import random import os import cv2 import json import matplotlib.pyplot as plt import paddle import paddle.nn.functional as F # Q9:完成下列推理部分的初始参数配置 # [Your codes 19] args={ 'project_name': 'Project011AlexNetButterfly', 'dataset_name': 'Butterfly', 'architecture': 'Alexnet', 'input_size': [227, 227, 3], 'mean_value': [0.485, 0.456, 0.406], # Imagenet均值 'std_value': [0.229, 0.224, 0.225], # Imagenet标准差 'dataset_root_path': 'D:\\Workspace\\ExpDatasets\\', 'deployment_root_path': 'D:\\Workspace\\ExpDeployments\\', } model_name = args['dataset_name'] + '_' + args['architecture'] deployment_final_models_path = os.path.join(args['deployment_root_path'], args['project_name'], 'final_models', model_name + '_final') dataset_root_path = os.path.join(args['dataset_root_path'], args['dataset_name']) json_dataset_info = os.path.join(dataset_root_path, 'dataset_info.json')

4.2 定义推理时的预处理函数

在预测之前,通常需要对图像进行预处理。此处的预处理方案和训练模型时所使用的预处理方案必须是一致的。对于彩色图,首先需要将图像resize为模型的输入尺度,其次需要将模型通道进行调整,转化[C,W,H]为[H,W,C],最后将像素色彩值归一化为[0,1]. 此外,按照Alexnet的基本要求,对于测试数据还需要进行十重切割,并在预测时求每个切片的预测结果的平均值作为最终的准确率。

import paddle import paddle.vision.transforms as T # 2. 用于测试的十重切割 def TenCrop(img, crop_size=args['input_size'][0]): # input_data: Height x Width x Channel img_size = 256 img = T.functional.resize(img, (img_size, img_size)) data = np.zeros([10, crop_size, crop_size, 3], dtype=np.uint8) # 获取左上、右上、左下、右下、中央,及其对应的翻转,共计10个样本 data[0] = T.functional.crop(img,0,0,crop_size,crop_size) data[1] = T.functional.crop(img,0,img_size-crop_size,crop_size,crop_size) data[2] = T.functional.crop(img,img_size-crop_size,0,crop_size,crop_size) data[3] = T.functional.crop(img,img_size-crop_size,img_size-crop_size,crop_size,crop_size) data[4] = T.functional.center_crop(img, crop_size) data[5] = T.functional.hflip(data[0, :, :, :]) data[6] = T.functional.hflip(data[1, :, :, :]) data[7] = T.functional.hflip(data[2, :, :, :]) data[8] = T.functional.hflip(data[3, :, :, :]) data[9] = T.functional.hflip(data[4, :, :, :]) return data # 3. 对于单幅图片(十重切割)所使用的数据预处理,包括均值消除,尺度变换 def SimplePreprocessing(image, input_size = args['input_size'][0:2], isTenCrop = True): image = cv2.resize(image, input_size) image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) transform = T.Compose([ T.ToTensor(), T.Normalize(mean=args['mean_value'], std=args['std_value']) ]) if isTenCrop: fake_data = np.zeros([10, 3, input_size[0], input_size[1]], dtype=np.float32) fake_blob = TenCrop(image) for i in range(10): fake_data[i] = transform(fake_blob[i]).numpy() else: fake_data = transform(image) return fake_data ############################################################## # 测试输入数据类:分别输出进行预处理和未进行预处理的数据形态和例图 if __name__ == "__main__": img_path = 'D:\\Workspace\\ExpDatasets\\Butterfly\\Data\\zebra\\zeb033.jpg' img0 = cv2.imread(img_path, 1) img1 = SimplePreprocessing(img0, isTenCrop=False) img2 = SimplePreprocessing(img0, isTenCrop=True) print('原始图像的形态为: {}'.format(img0.shape)) print('简单预处理后(经过十重切割后): {}'.format(img1.shape)) print('简单预处理后(未经过十重切割后) {}'.format(img2.shape)) img1_show = img1.transpose((1, 2, 0)) img2_show = img2[0].transpose((1, 2, 0)) plt.figure(figsize=(18, 6)) ax0 = plt.subplot(1,3,1) ax0.set_title('img0') plt.imshow(img0) ax1 = plt.subplot(1,3,2) ax1.set_title('img1_show') plt.imshow(img1_show) ax2 = plt.subplot(1,3,3) ax2.set_title('img2_show') plt.imshow(img2_show) plt.show()

原始图像的形态为: (390, 500, 3)

简单预处理后(经过十重切割后): [3, 227, 227]

简单预处理后(未经过十重切割后) (10, 3, 227, 227)

4.3 数据推理

数据推理部分包含两个函数:test() 和 predict()。前者用于计算所有测试样本的准确度,后者用于对单个样本进行推理。在进行单样本推理时,我们可以从测试集中随机选一个样本进行测试,也可以指定样本的路径。在实际应用中,通常是需要给出实际待测样本的。

Q10:补全下列模型推理代码(10分) ([Your codes 20])

################################################# # 修改者: Xinyu Ou (http://ouxinyu.cn) # 功能: 使用部署模型对测试集进行评估 # 基本功能: # 1. 使用部署模型在测试集上进行批量预测,并输出预测结果 # 2. 使用部署模型在测试集上进行单样本预测,并对预测结果和真实结果进行对比 ################################################# # 1. 使用部署模型在测试集上进行单样本预测 def predict(model, image): isTenCrop = False image = SimplePreprocessing(image, isTenCrop=isTenCrop) print(image.shape) if isTenCrop: logits = model(image) pred = F.softmax(logits) pred = np.mean(pred.numpy(), axis=0) else: image = paddle.unsqueeze(image, axis=0) logits = model(image) pred = F.softmax(logits) pred_id = np.argmax(pred) return pred_id ############################################################## if __name__ == '__main__': # Q10:补全下列推理代码 # [Your codes 20] # 0. 载入模型 model = paddle.jit.load(deployment_final_models_path) # 1. 计算测试集的准确度 # test(model, test_reader()) # 2. 输出单个样本测试结果 # 2.1 获取待预测样本的标签信息 with open(json_dataset_info, 'r') as f_info: dataset_info = json.load(f_info) # 2.2 从测试列表中随机选择一副图像 test_list = os.path.join(dataset_root_path, 'test.txt') with open(test_list, 'r') as f_test: lines = f_test.readlines() line = random.choice(lines) img_path, label = line.split() img_path = os.path.join(dataset_root_path, 'Data', img_path) # img_path = 'D:\\Workspace\\ExpDatasets\\Butterfly\\Data\\zebra\\zeb033.jpg' image = cv2.imread(img_path, 1) # 2.4 给出待测样本的类别 pred_id = predict(model, image) # # 将预测的label和ground_turth label转换为label name label_name_gt = dataset_info['label_dict'][str(label)] label_name_pred = dataset_info['label_dict'][str(pred_id)] print('待测样本的类别为:{}, 预测类别为:{}'.format(label_name_gt, label_name_pred)) # 2.5 显示待预测样本 image_rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) plt.imshow(image_rgb) plt.show()

[3, 227, 227]

待测样本的类别为:admiral, 预测类别为:admiral